NLP Course | For You

This is an extension of the (ML for) Natural Language Processing course I have taught at the Yandex School of Data Analysis (YSDA) since fall 2018 (since 2022, at the Israeli branch). For now, only some of the topics are likely to be covered here.

This new format of the course is designed for:

- convenience

Easy to find, learn, or recap material (both standard and more advanced), and to try it in practice.

- clarity

Each part, from front to back, is the result of my care not only about what to say, but also about how to say it and, especially, how to show it.

- you

I wanted to make these materials so that you (yes, you!) could study on your own, what you like, and at your own pace. My main purpose is to help you enter your own very personal adventure. For you.

If you want to use the materials (e.g., figures) in your paper/report/whatnot and cite this course, you can do so using the following BibTeX:

What's inside: A Guide to Your Adventure

Lectures-blogs

which I tried to make:

- intuitive, clear and engaging;

- complete: full lecture and more;

- up-to-date with the field.

Bonus:

Seminars & Homeworks

For each topic, you can take notebooks from our 8.8k-☆ course repo.

Since 2020, in both PyTorch and TensorFlow!

Interactive parts & Exercises

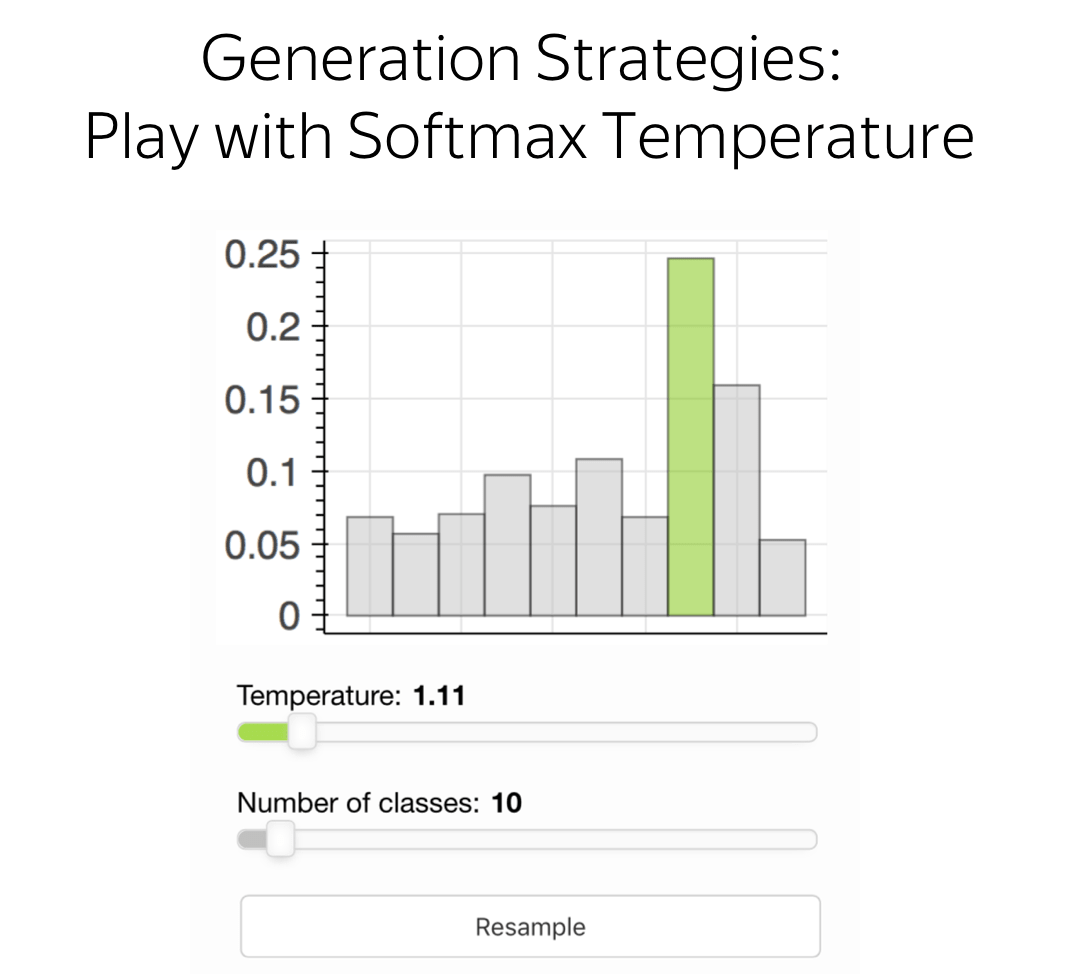

Often I ask you to go over "slides" visualizing some process, play with something or just think.

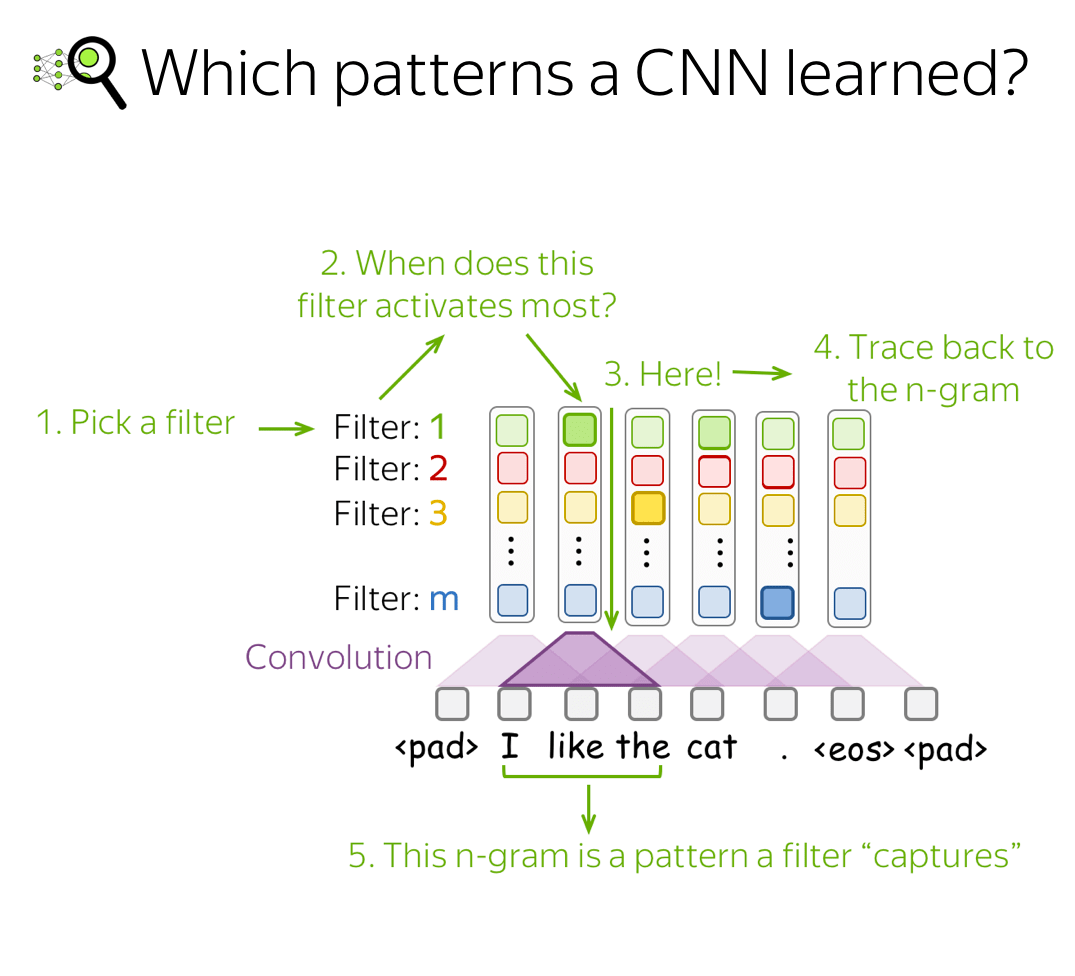

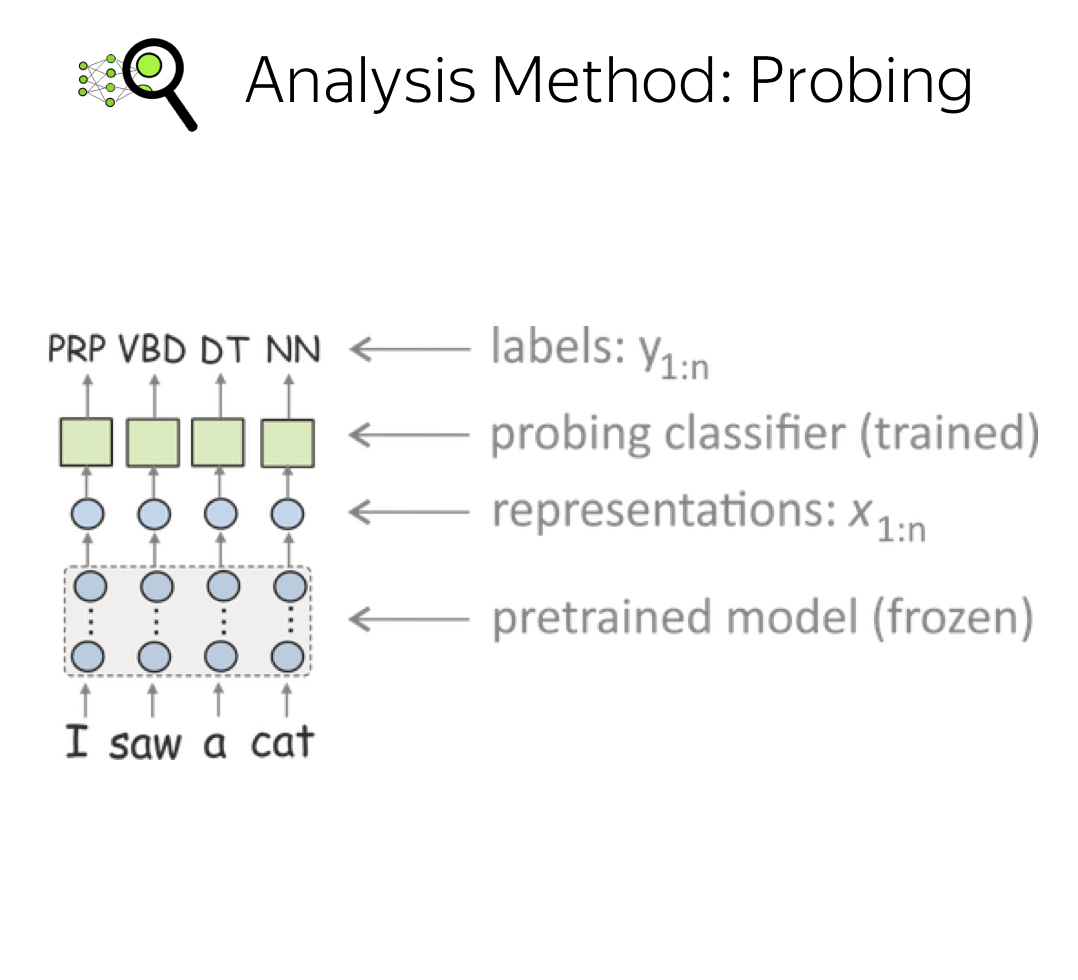

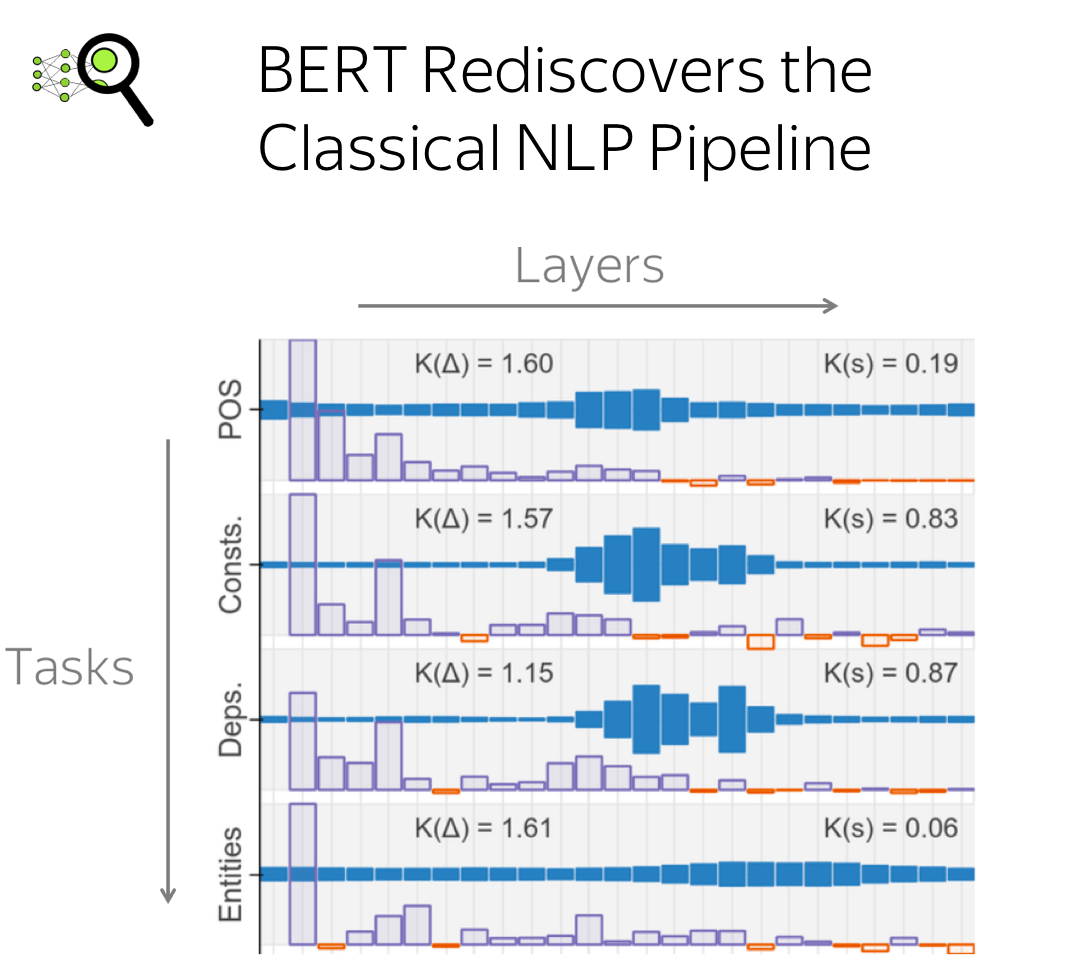

Analysis and Interpretability

Since 2020, top NLP conferences (ACL, EMNLP) have had an "Analysis and Interpretability" area: one more confirmation that analysis is an integral part of NLP.

Each lecture has a section with relevant results on the internal workings of models and methods.

Research Thinking

Learn to think like a research scientist:

- find flaws in an approach,

- think why/when something can help,

- come up with ways to improve,

- learn about previous attempts.

It is well known that you learn something more easily if you are not given the answer right away but think about it first. Even if you don't want to be a researcher, this is still a good way to learn things!

Here I define the starting point: something you already know.

Why can this or that be useful?

Possible answers

Existing solutions

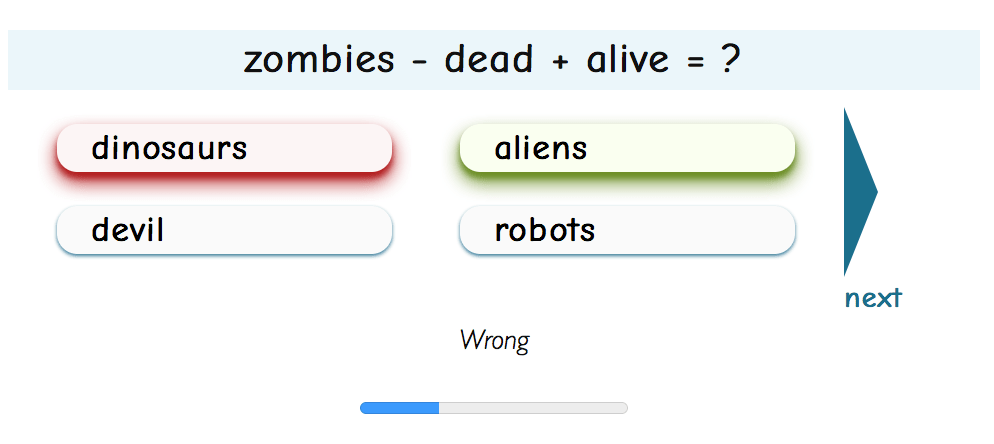

Have Fun!

Just fun.

Here you'll see some NLP games related to a lecture topic.

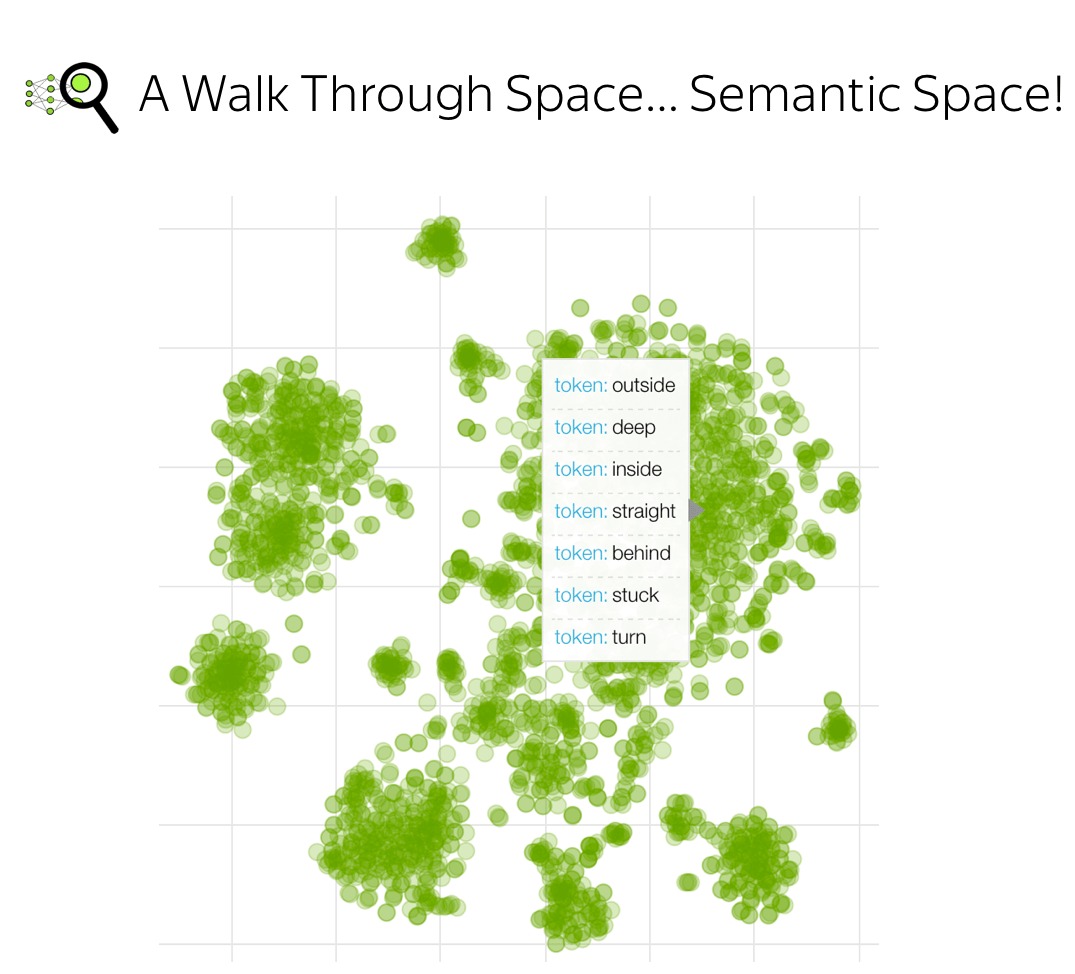

Week 1: Semantic Space Surfer

Course

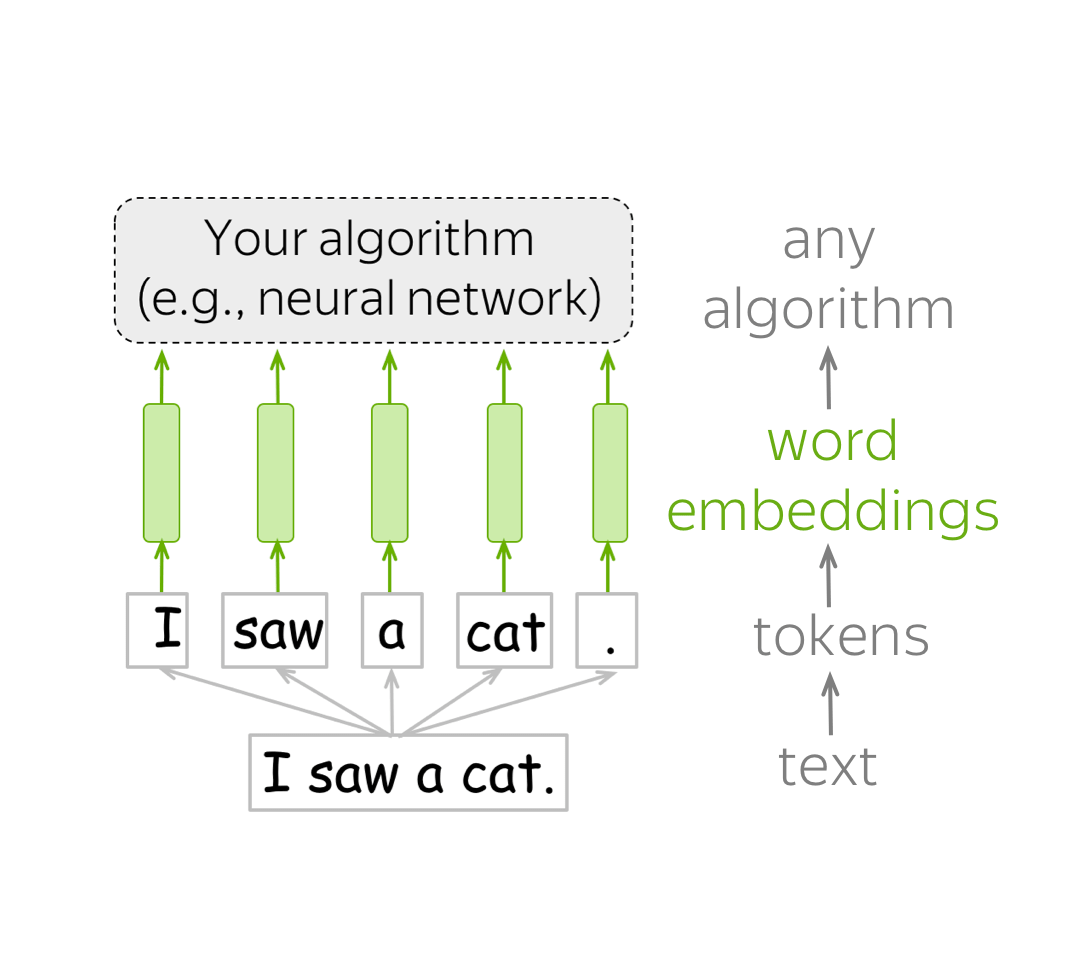

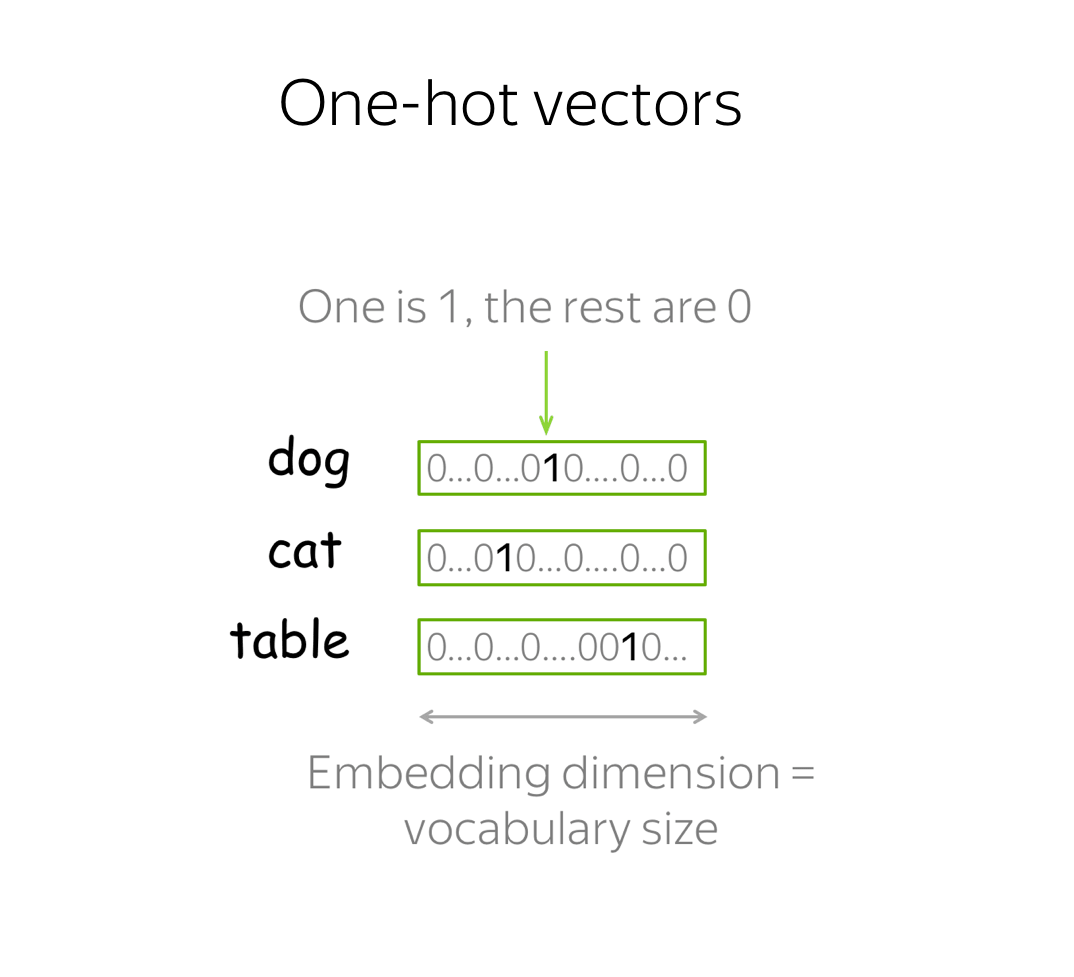

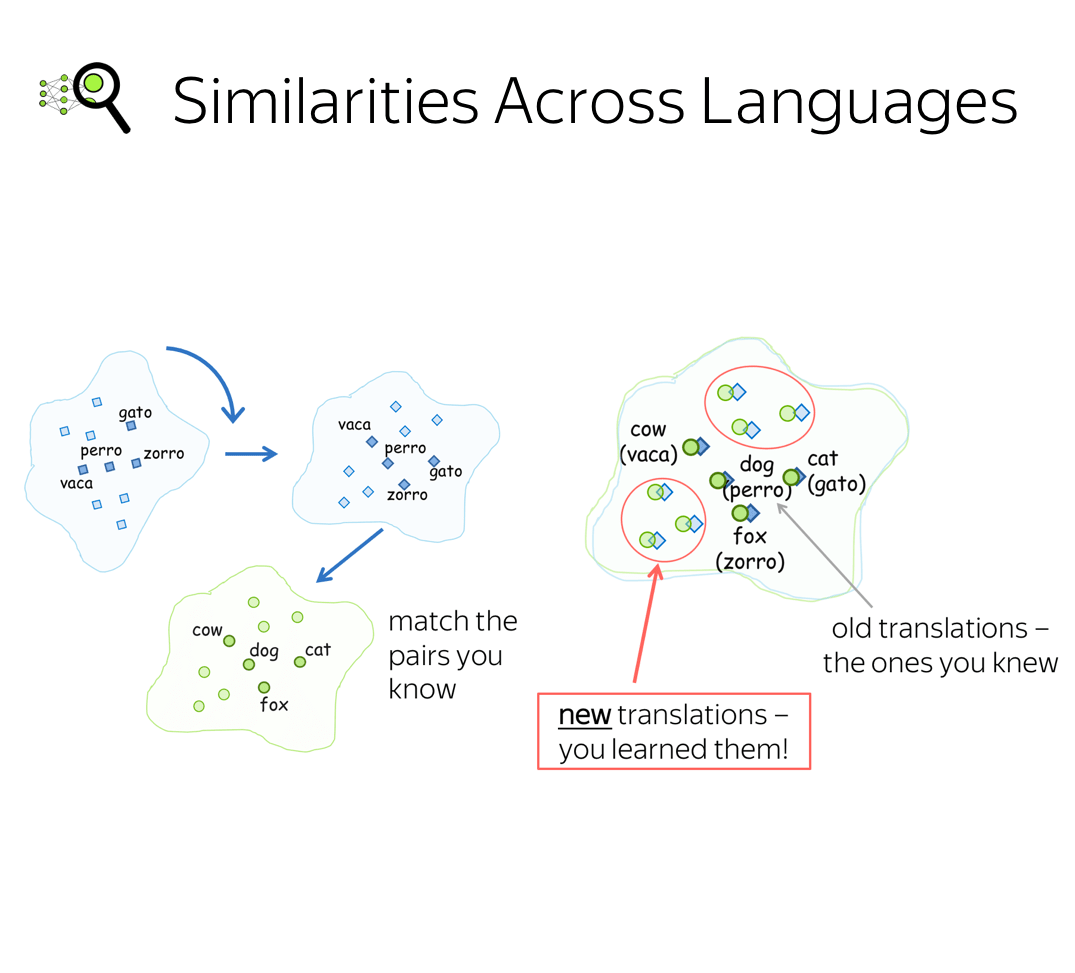

Word Embeddings

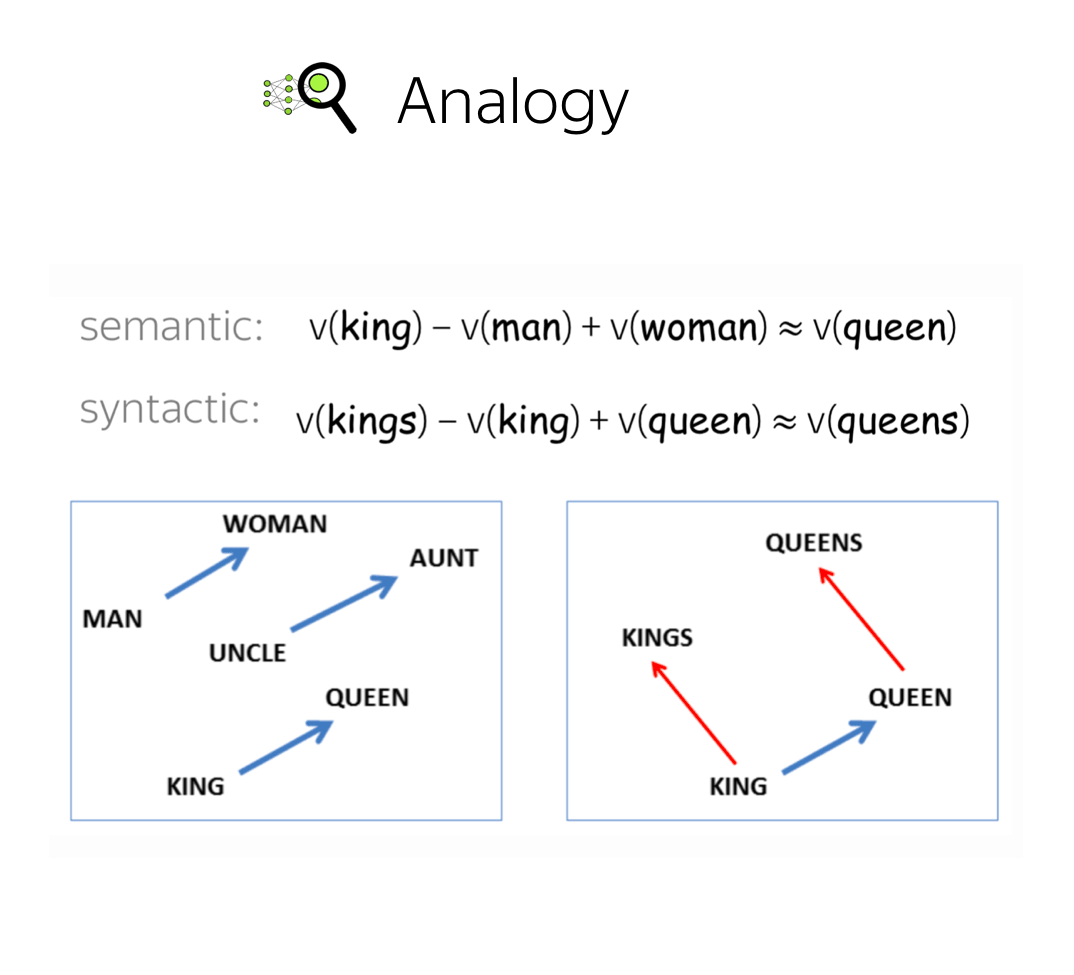

- Distributional semantics

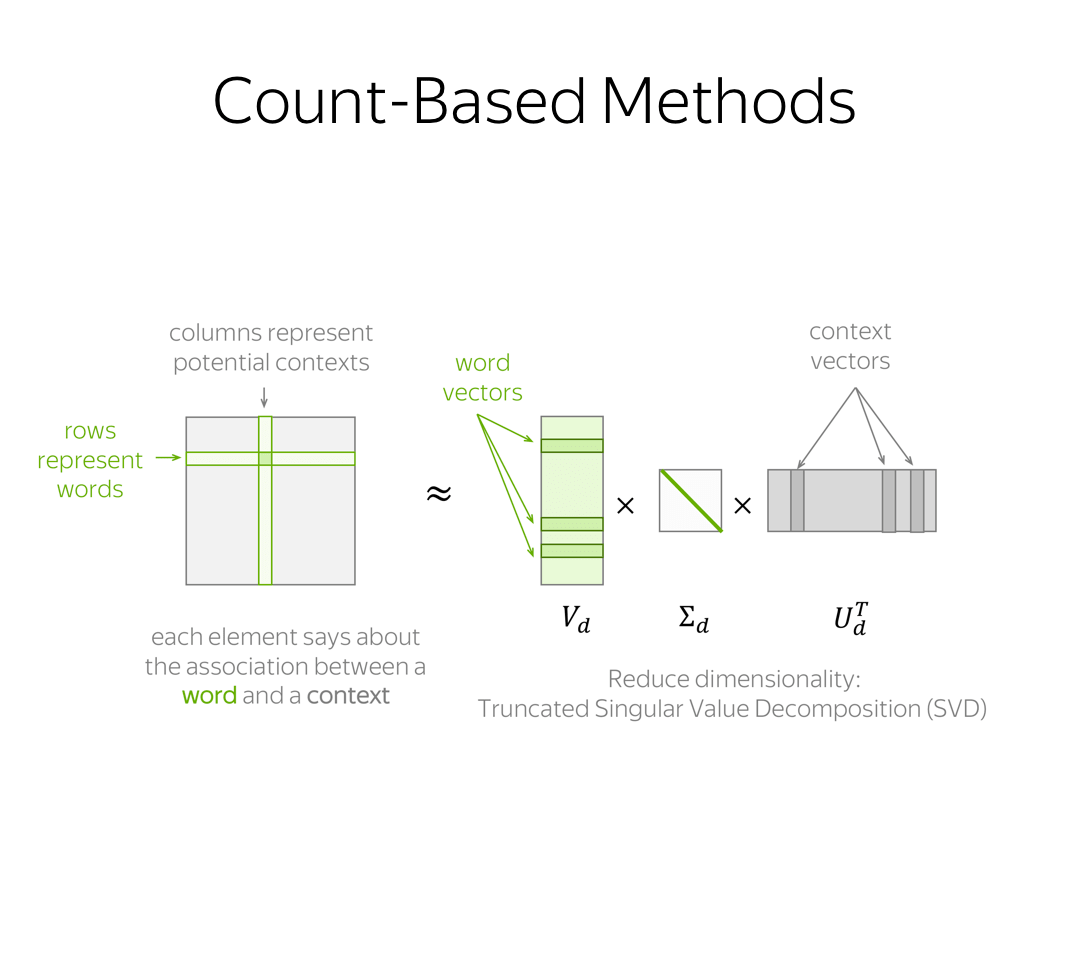

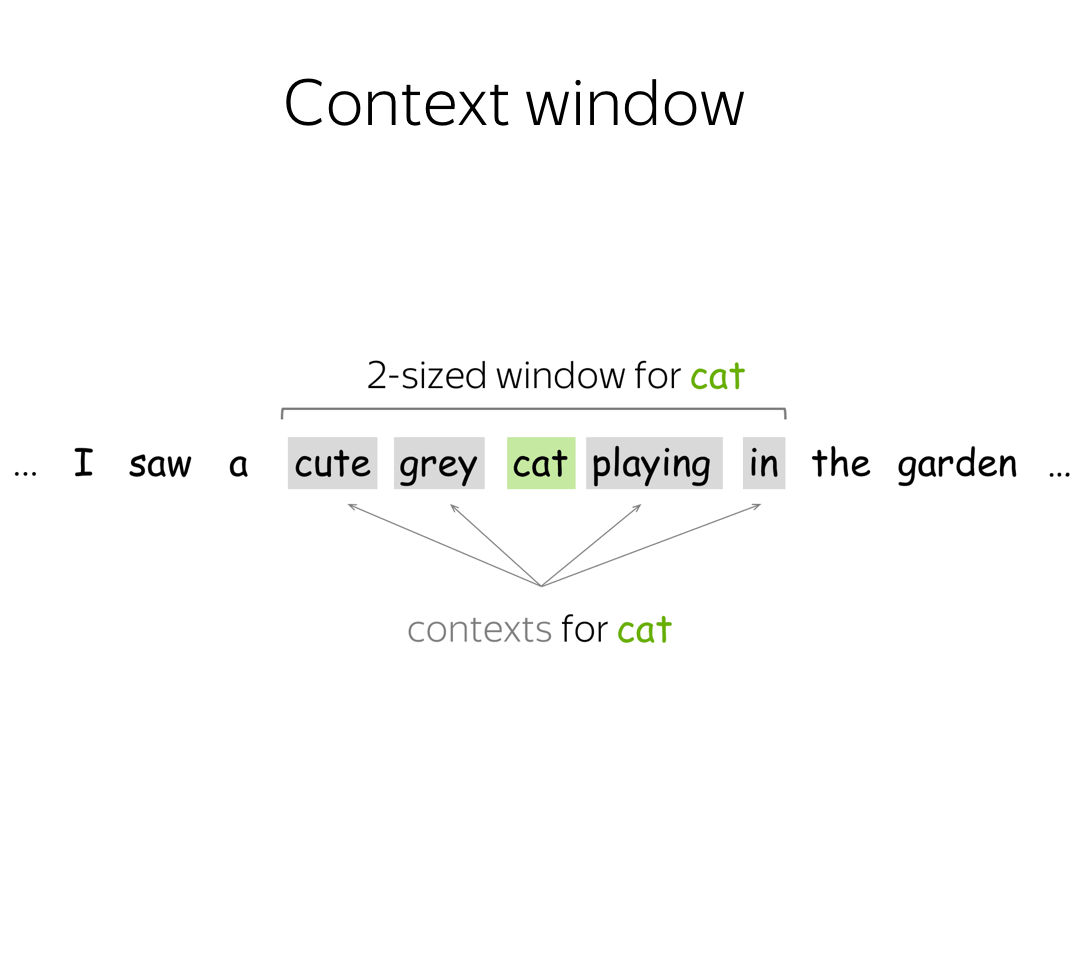

- Count-based (pre-neural) methods

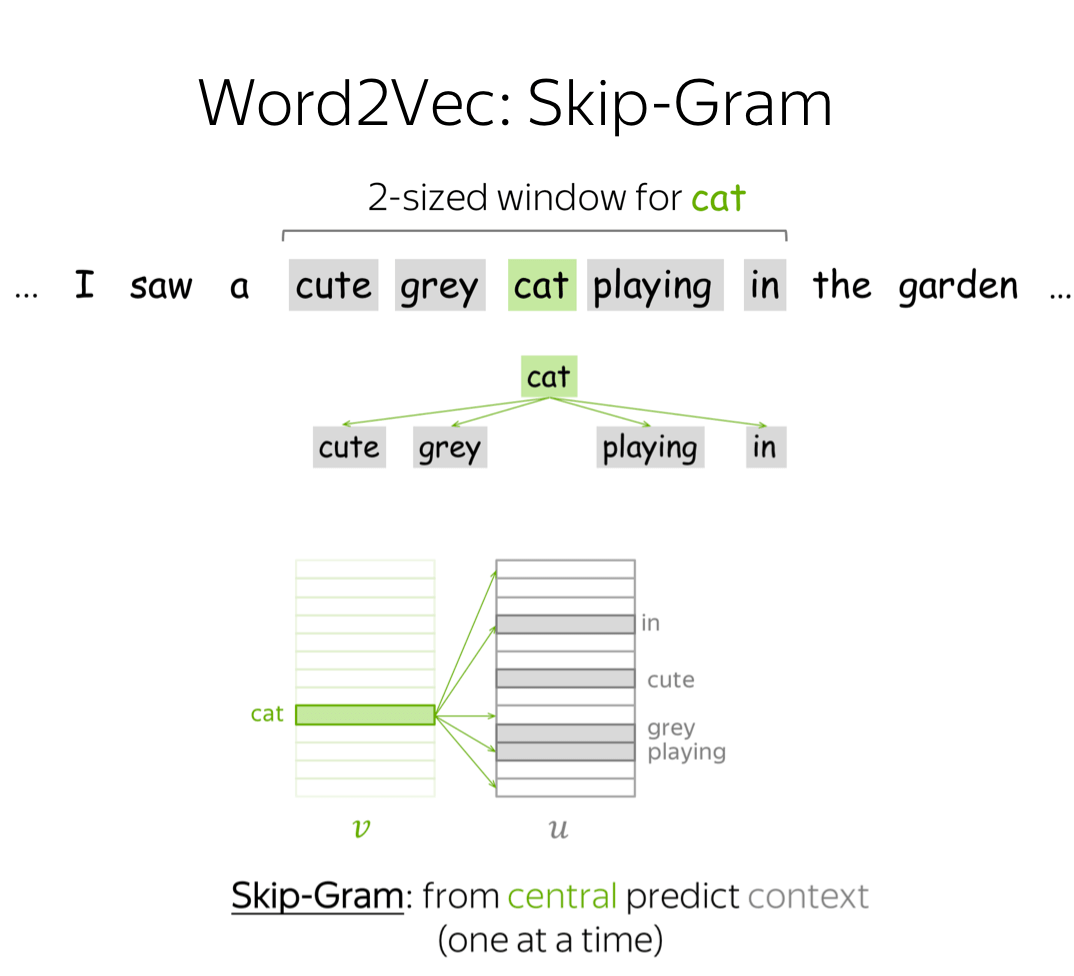

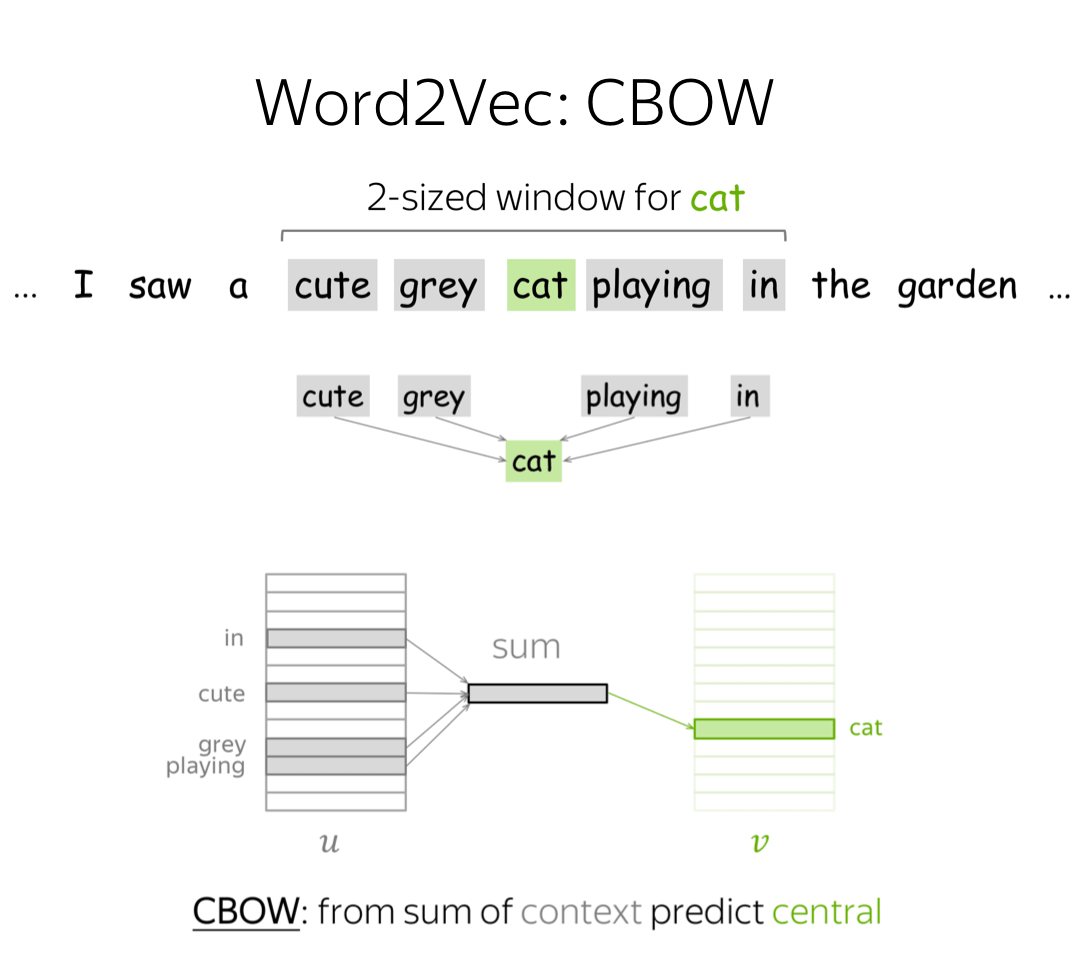

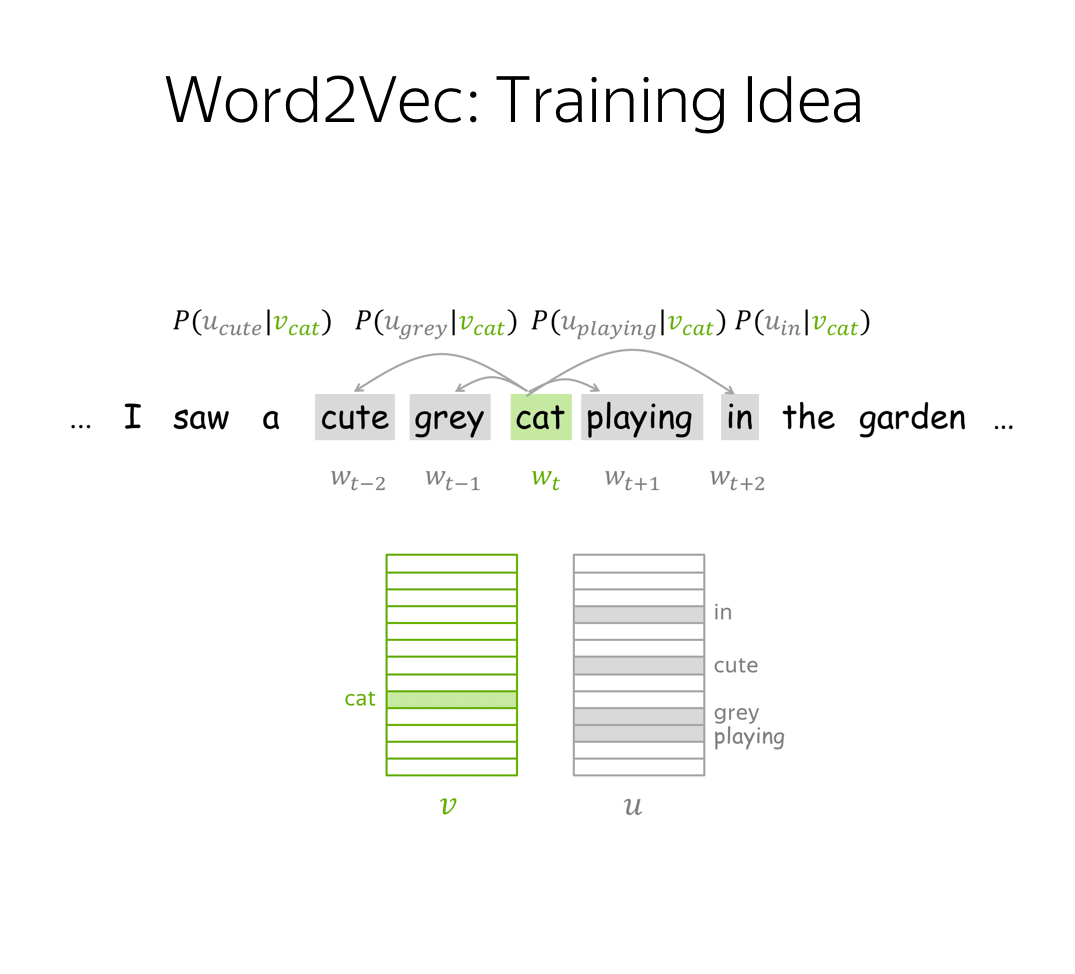

- Word2Vec: learn vectors

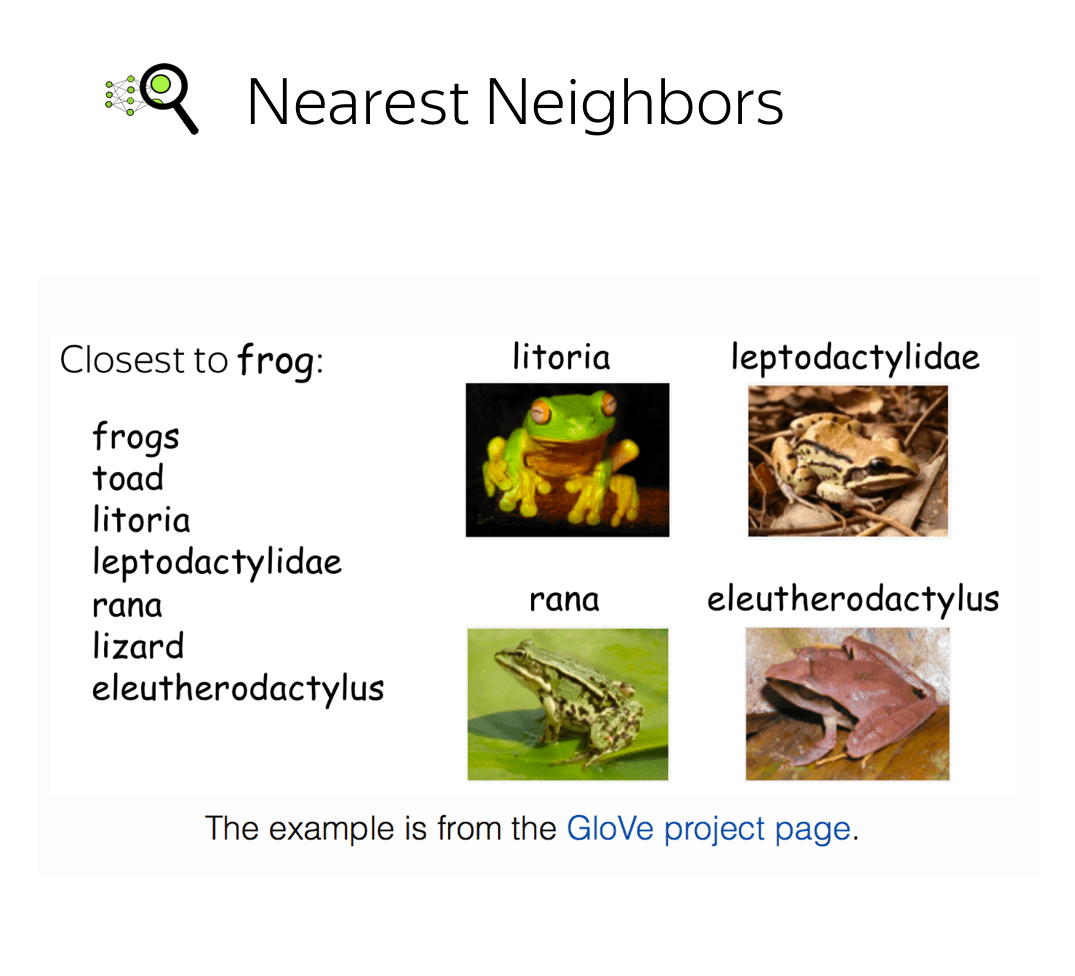

- GloVe: count, then learn

- Evaluation: intrinsic vs extrinsic

Analysis and Interpretability

Bonus:

Seminar & Homework

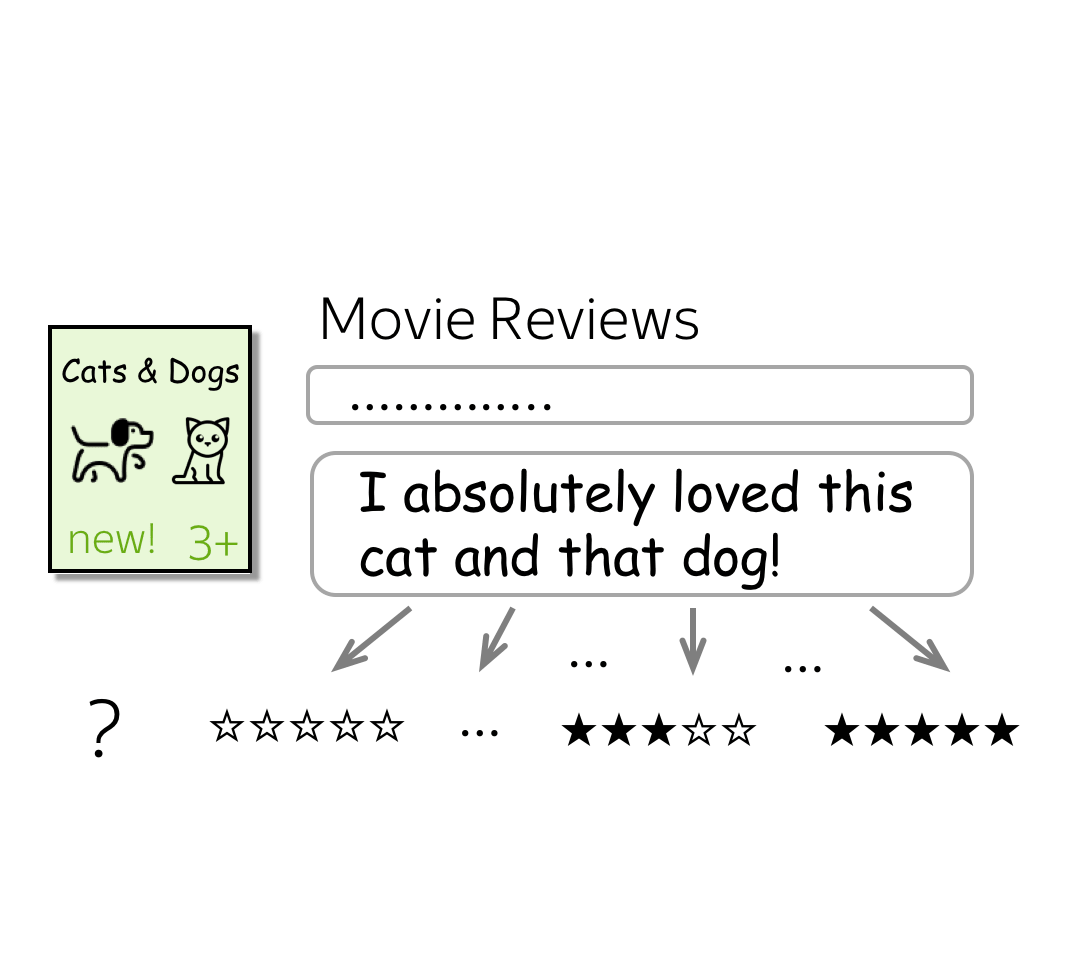

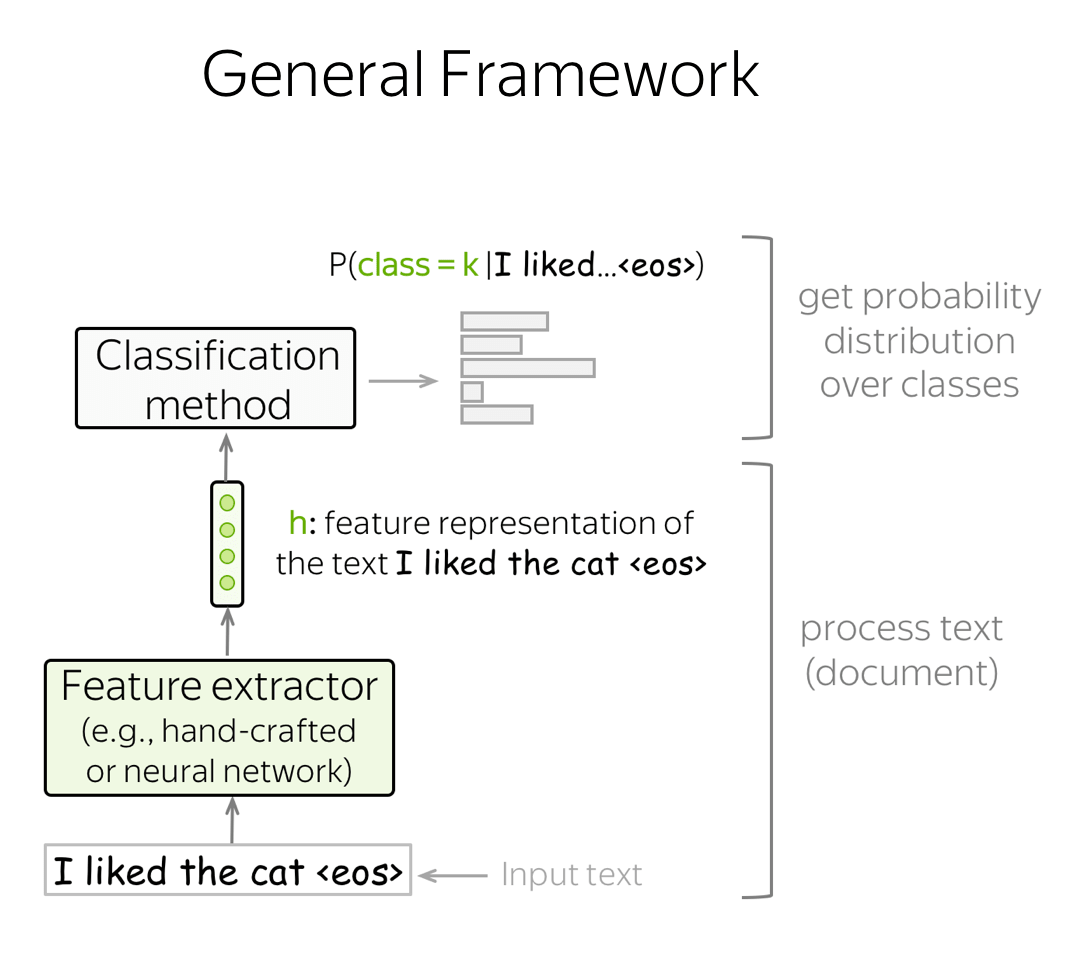

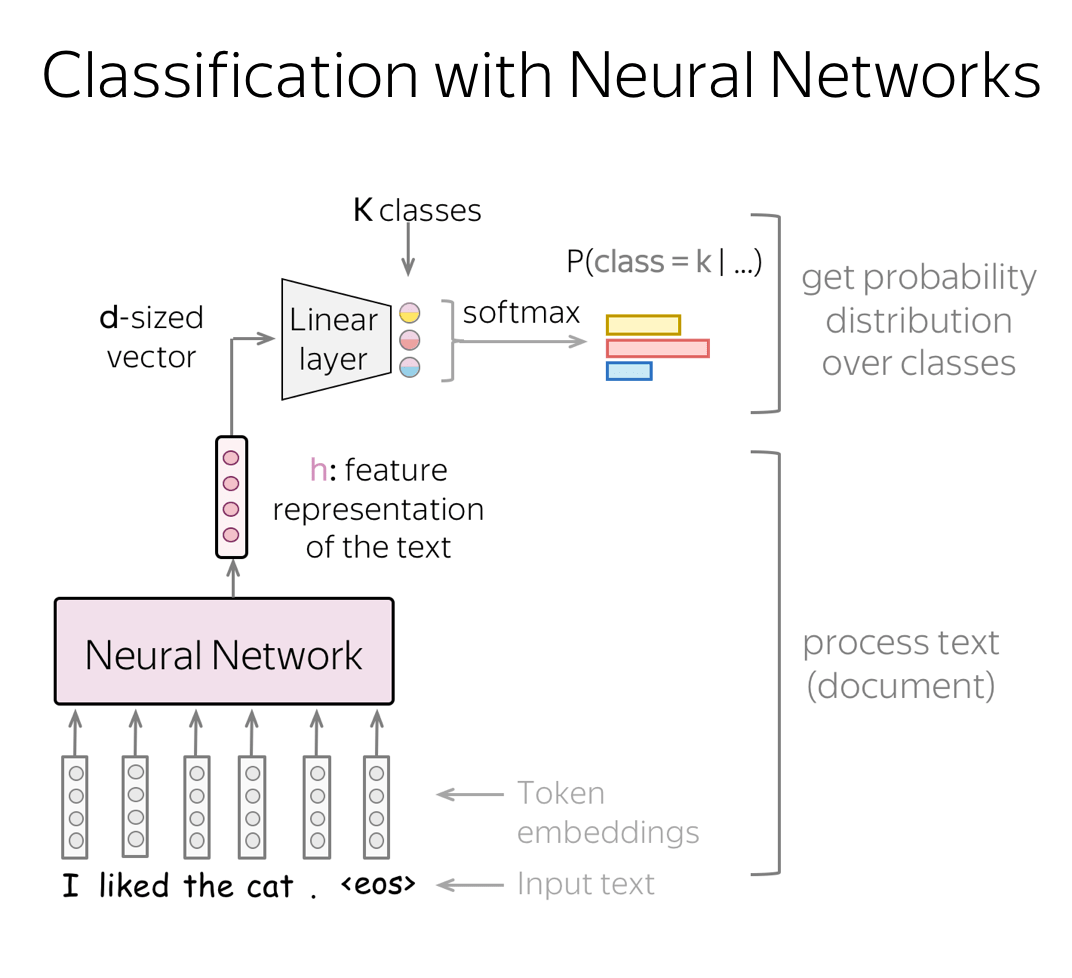

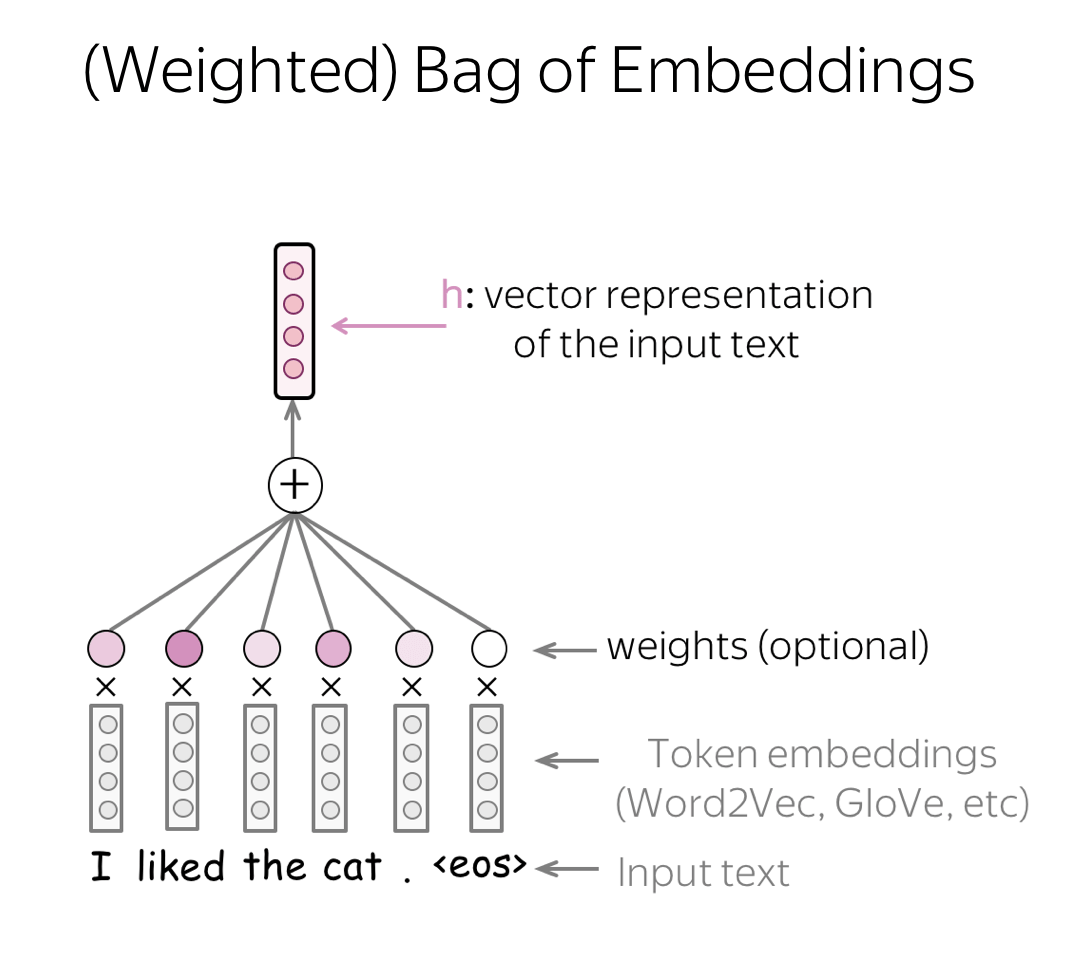

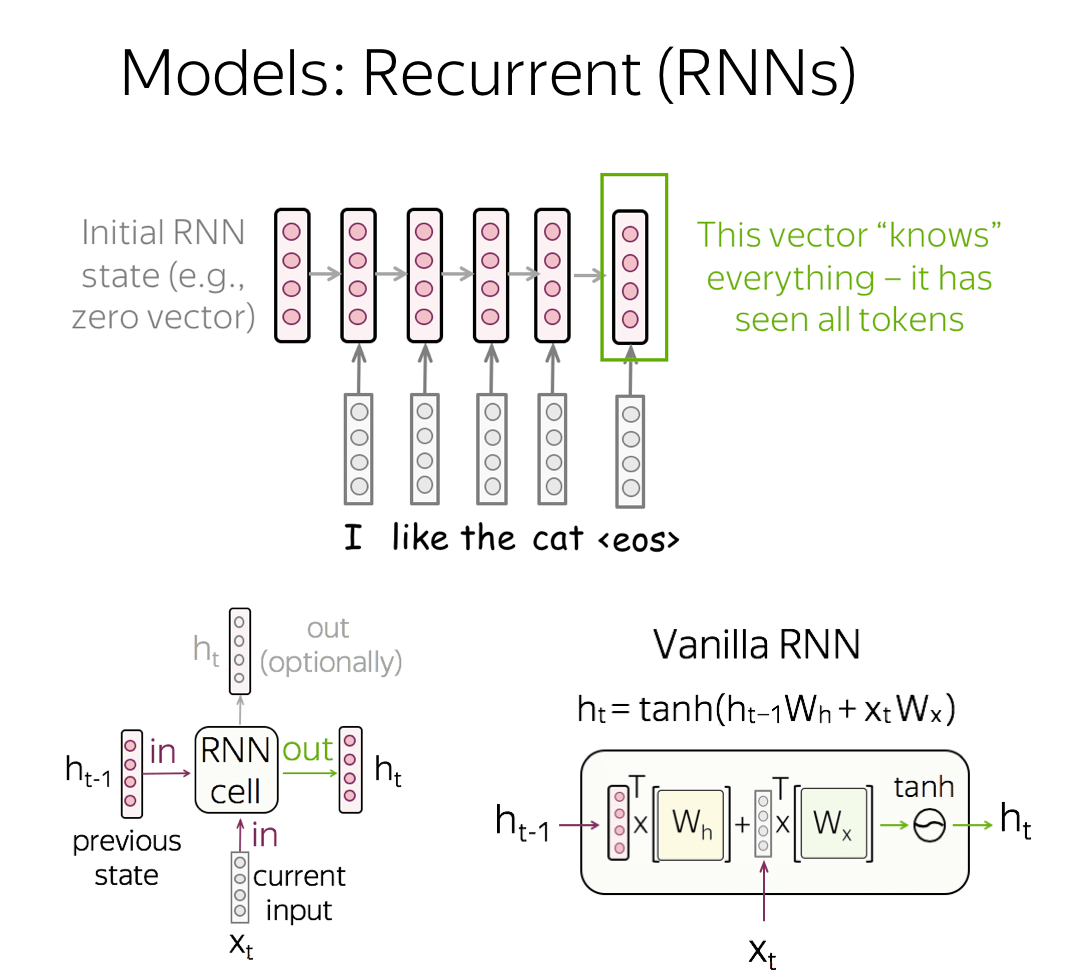

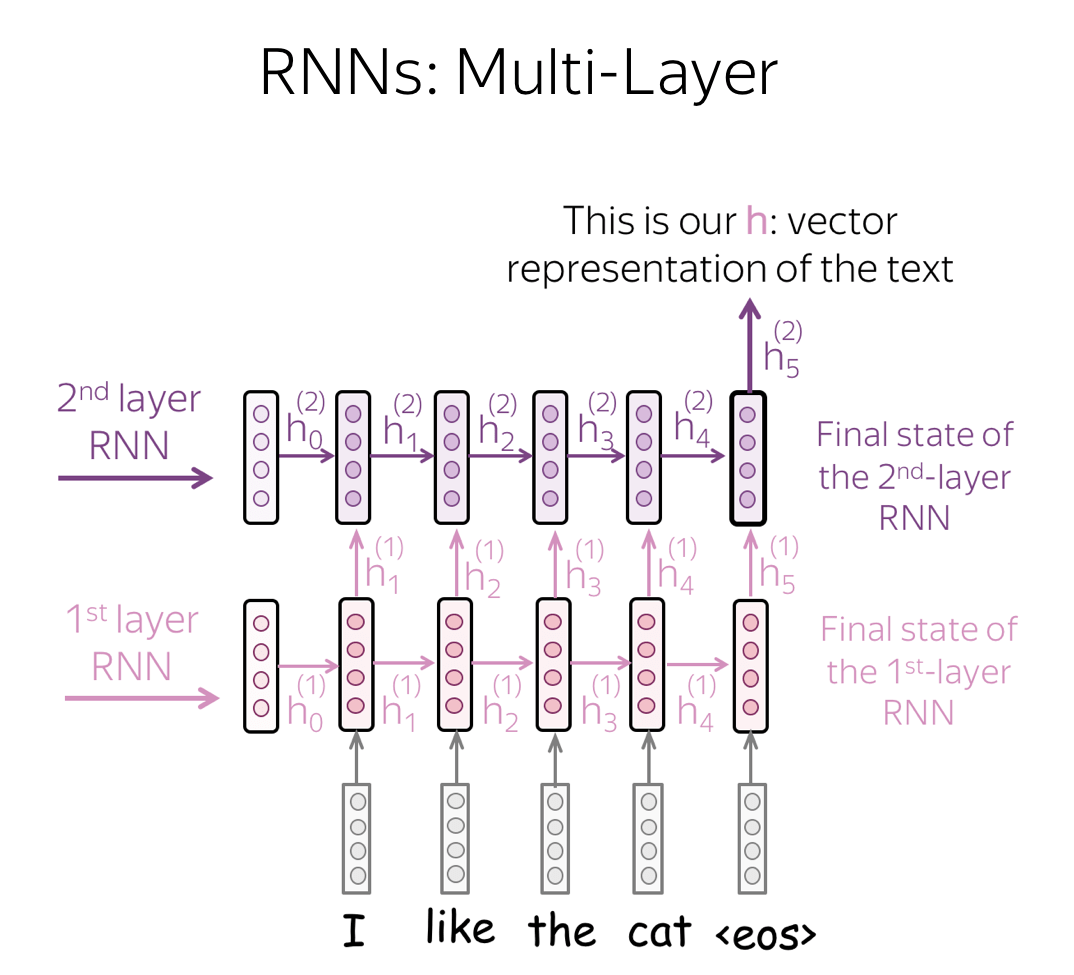

Text Classification

- Intro and Datasets

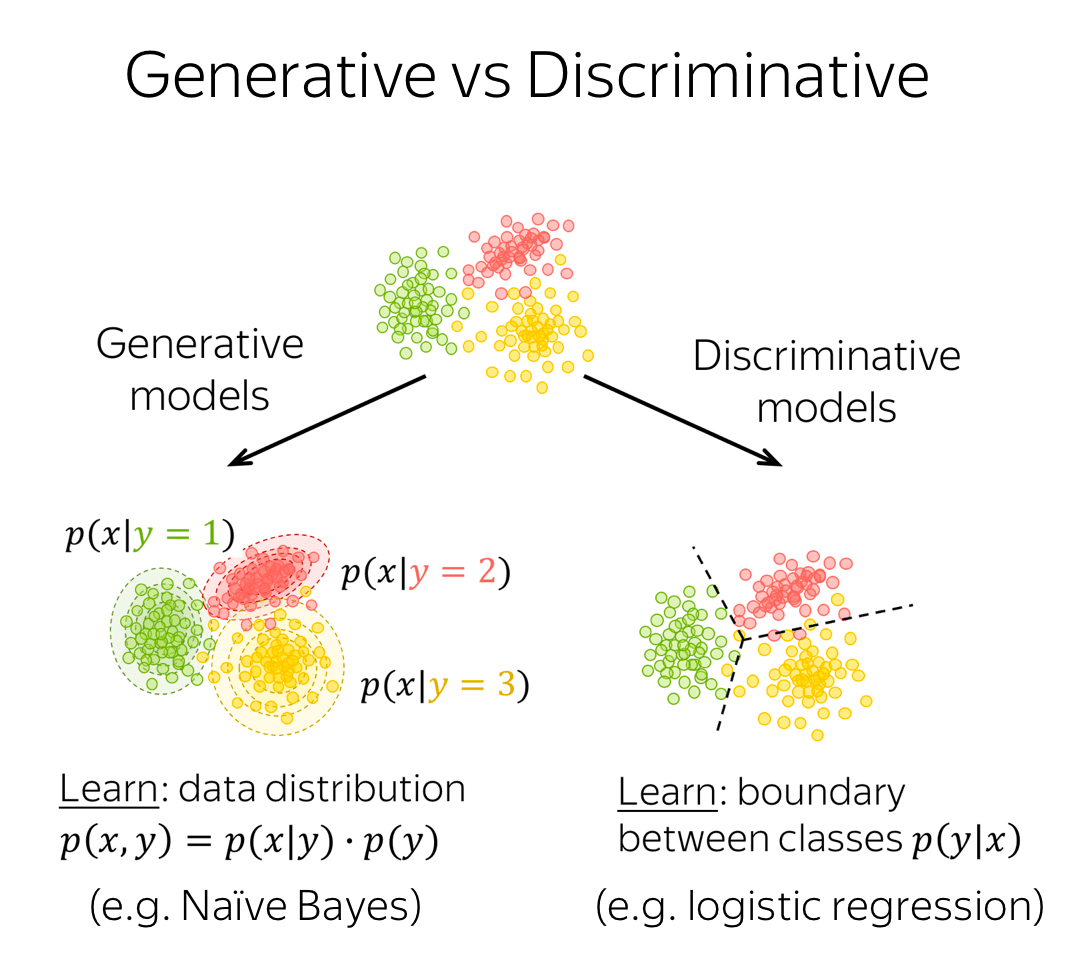

- General Framework

- Classical Approaches: Naive Bayes, MaxEnt (Logistic Regression), SVM

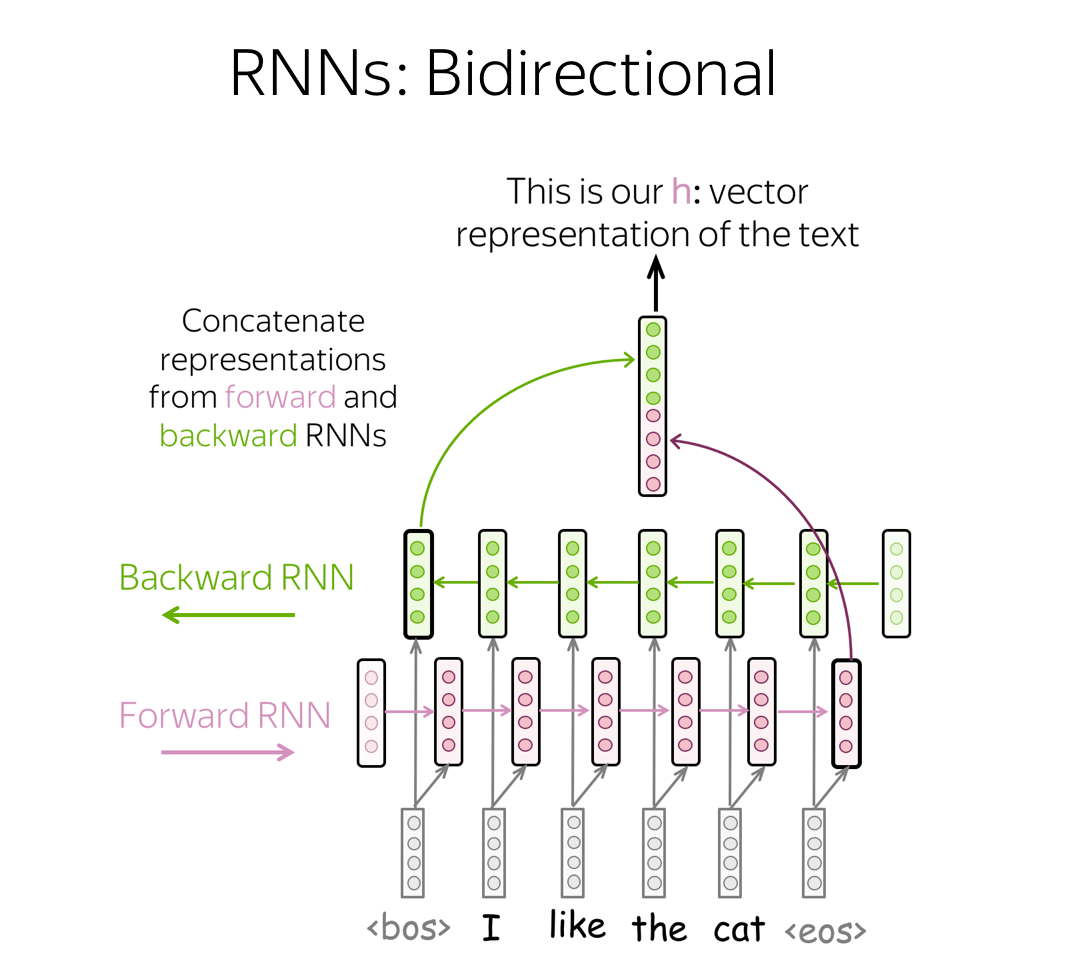

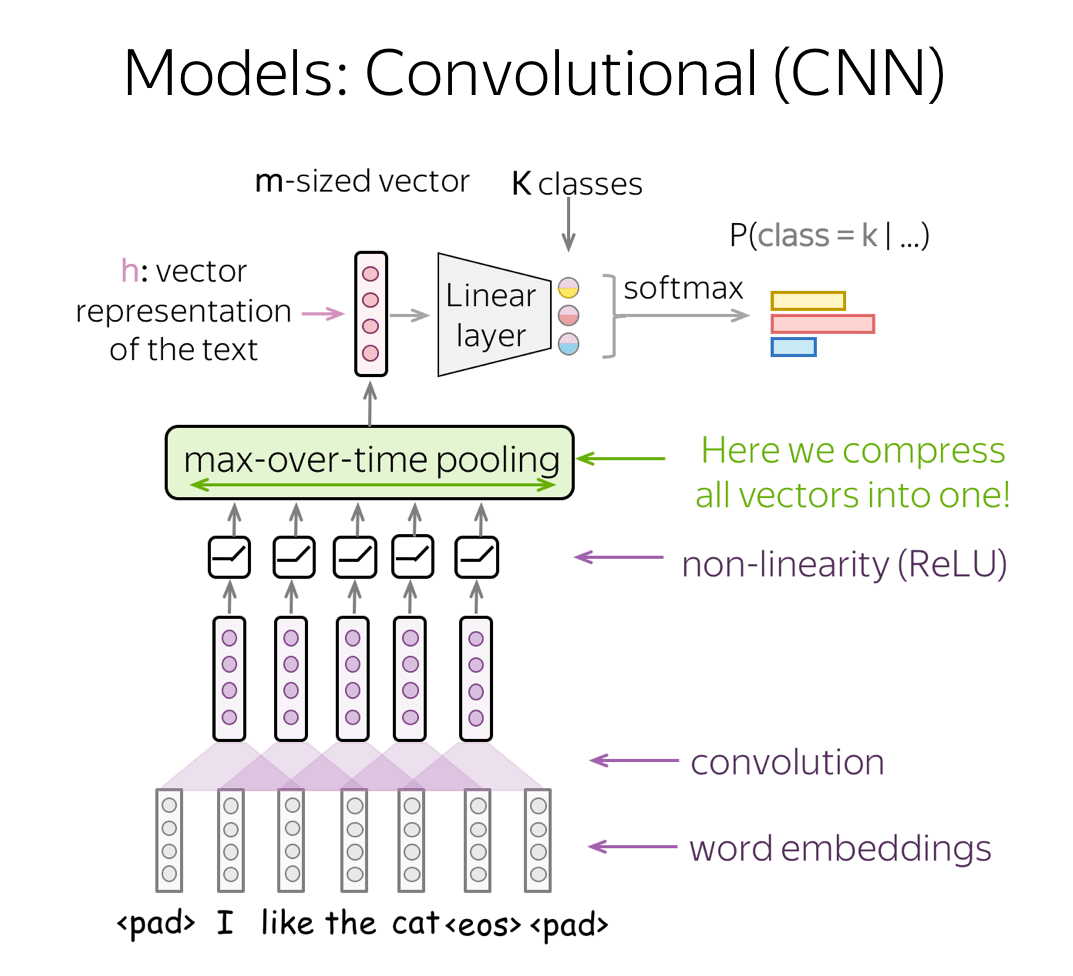

- Neural Networks: RNNs and CNNs

Analysis and Interpretability

Bonus:

Seminar & Homework

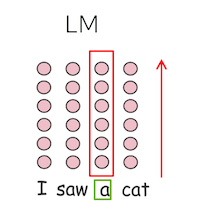

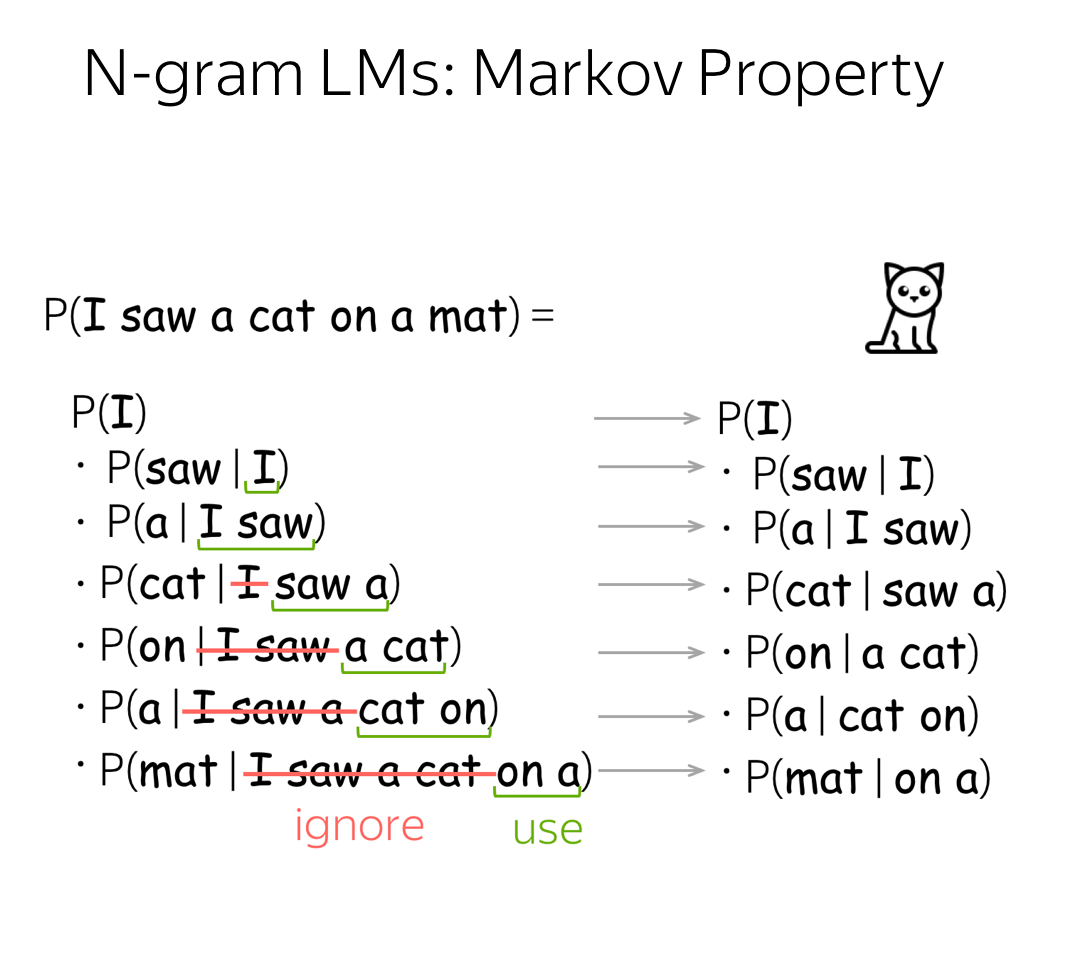

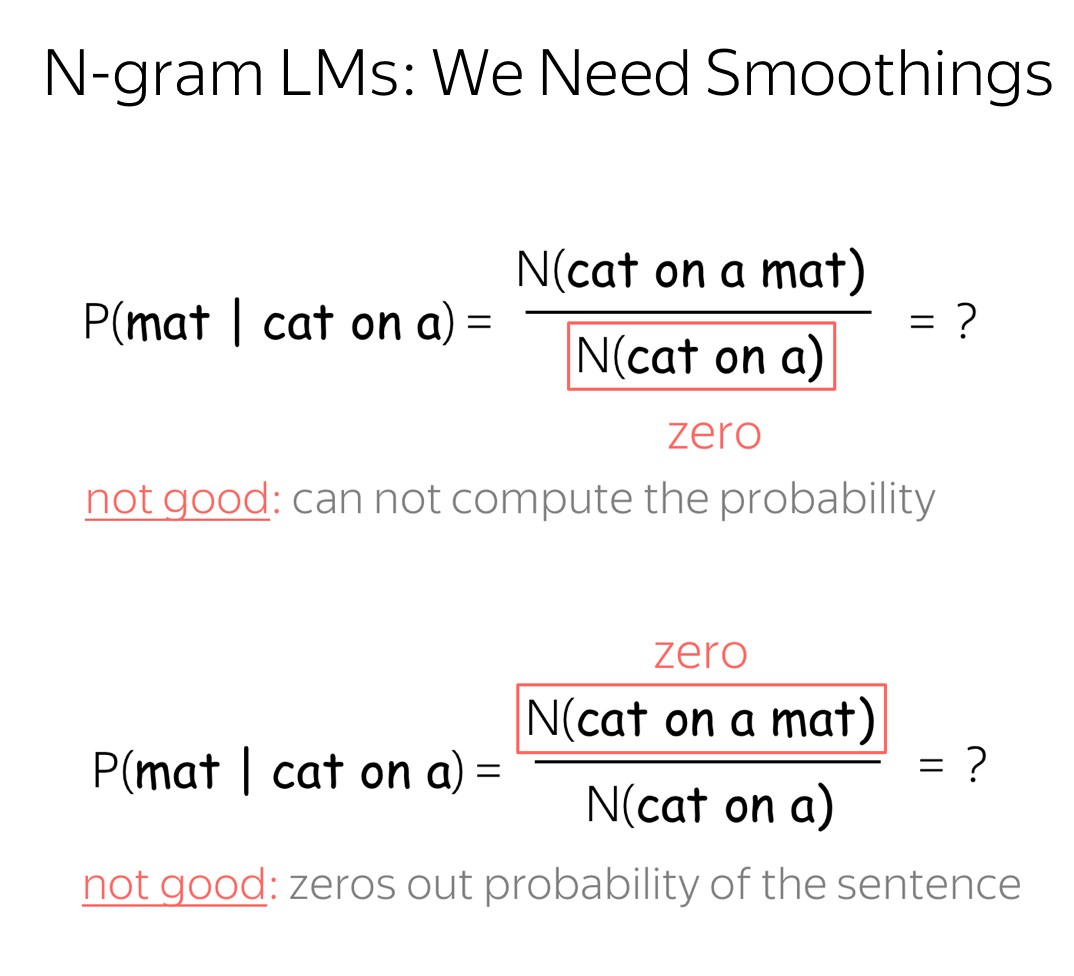

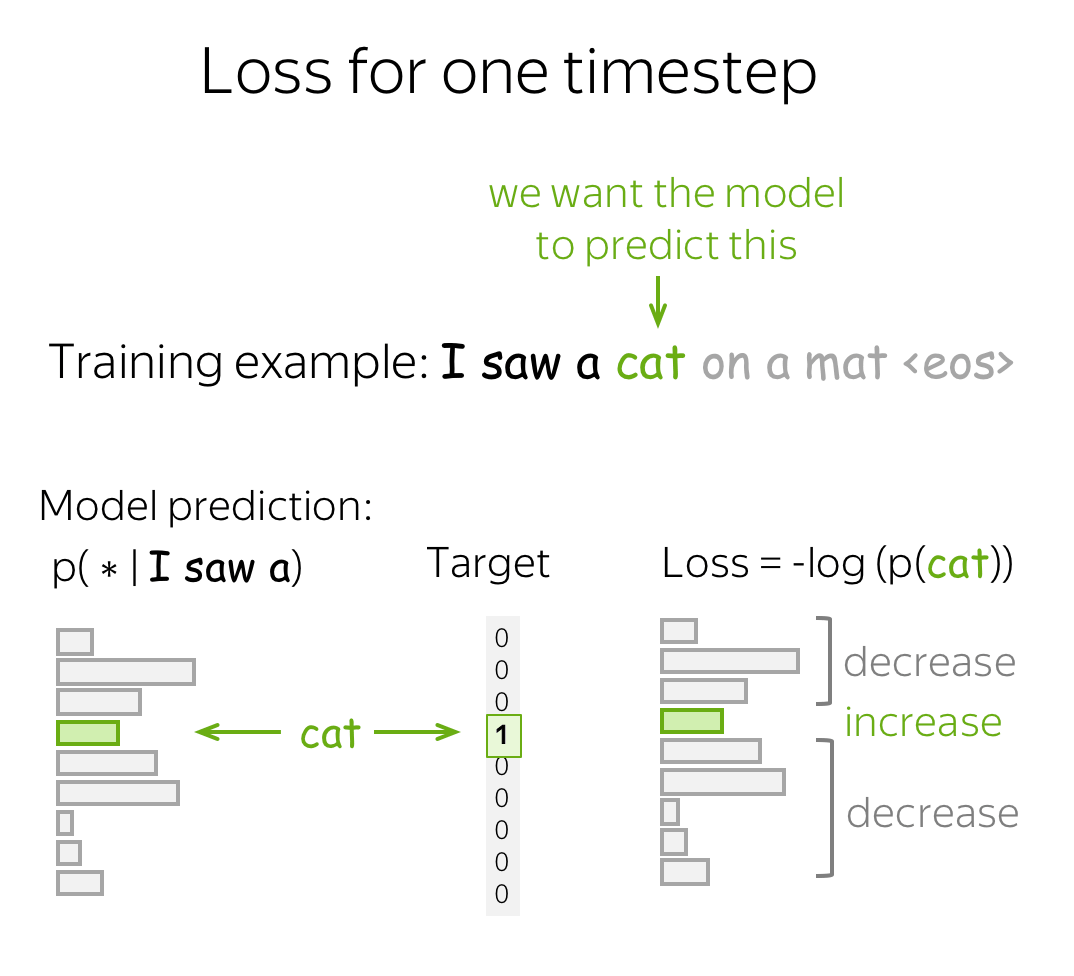

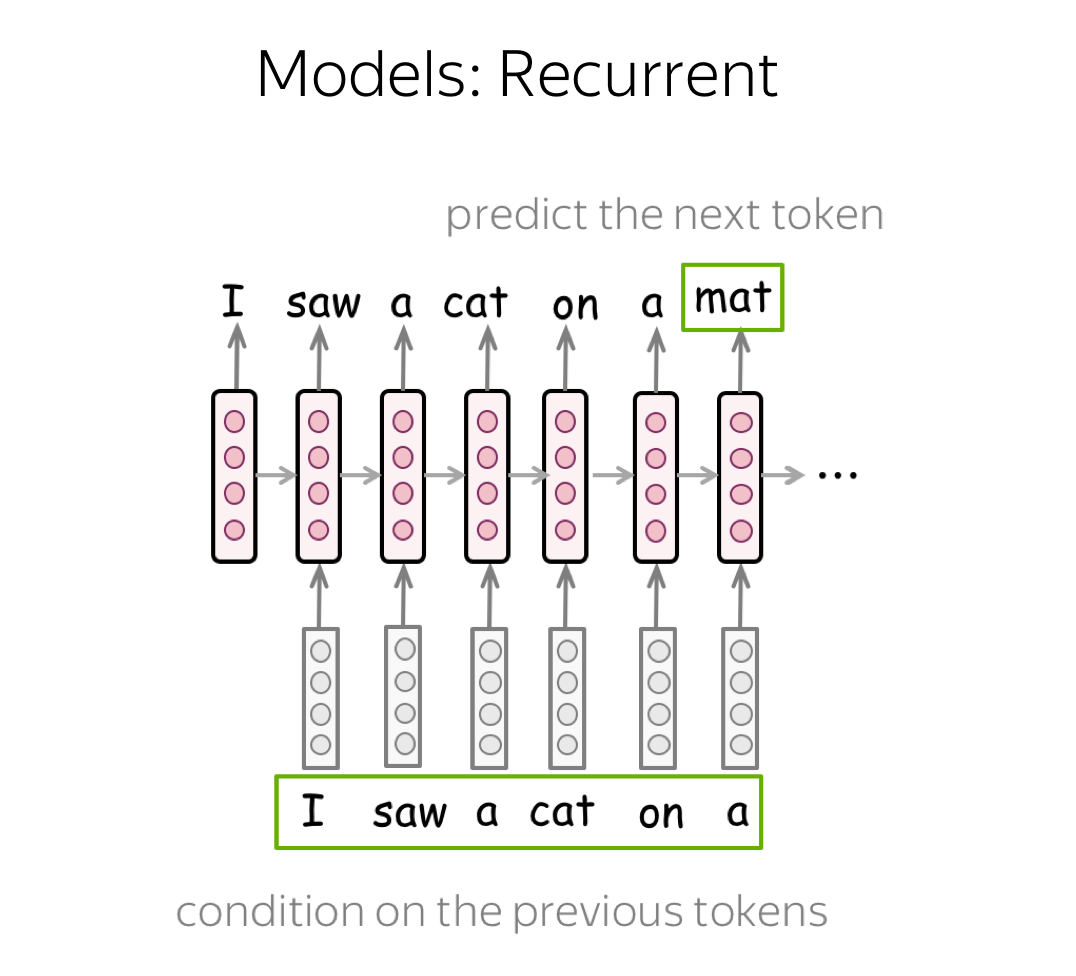

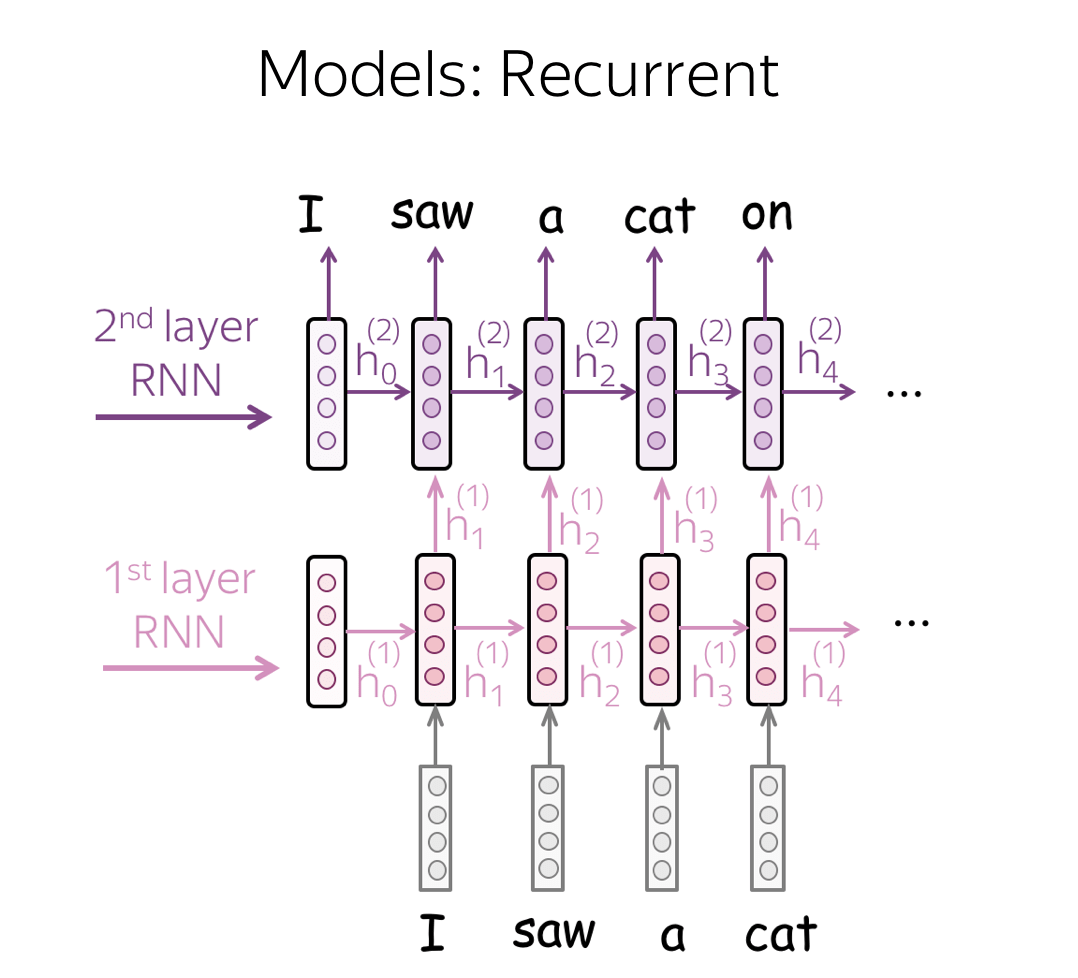

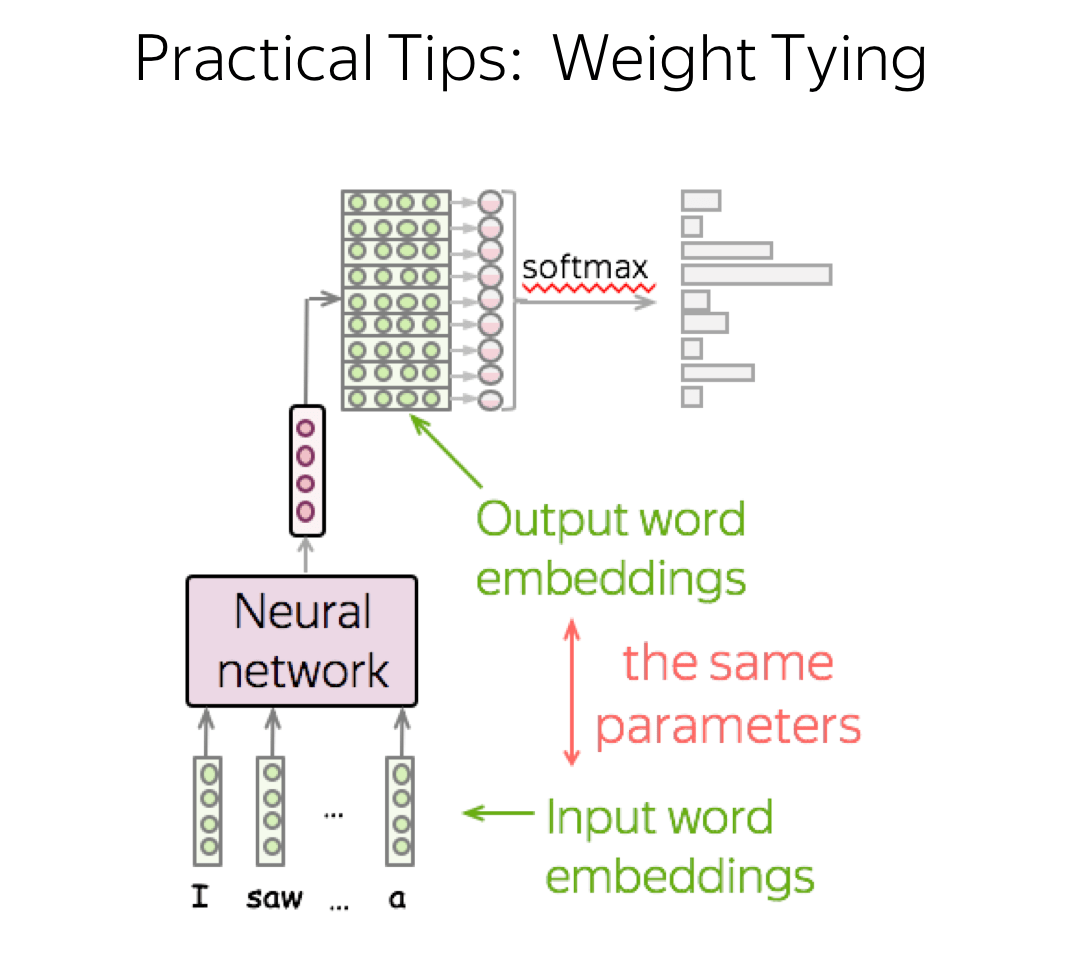

Language Modeling

- General Framework

- N-Gram LMs

- Neural LMs

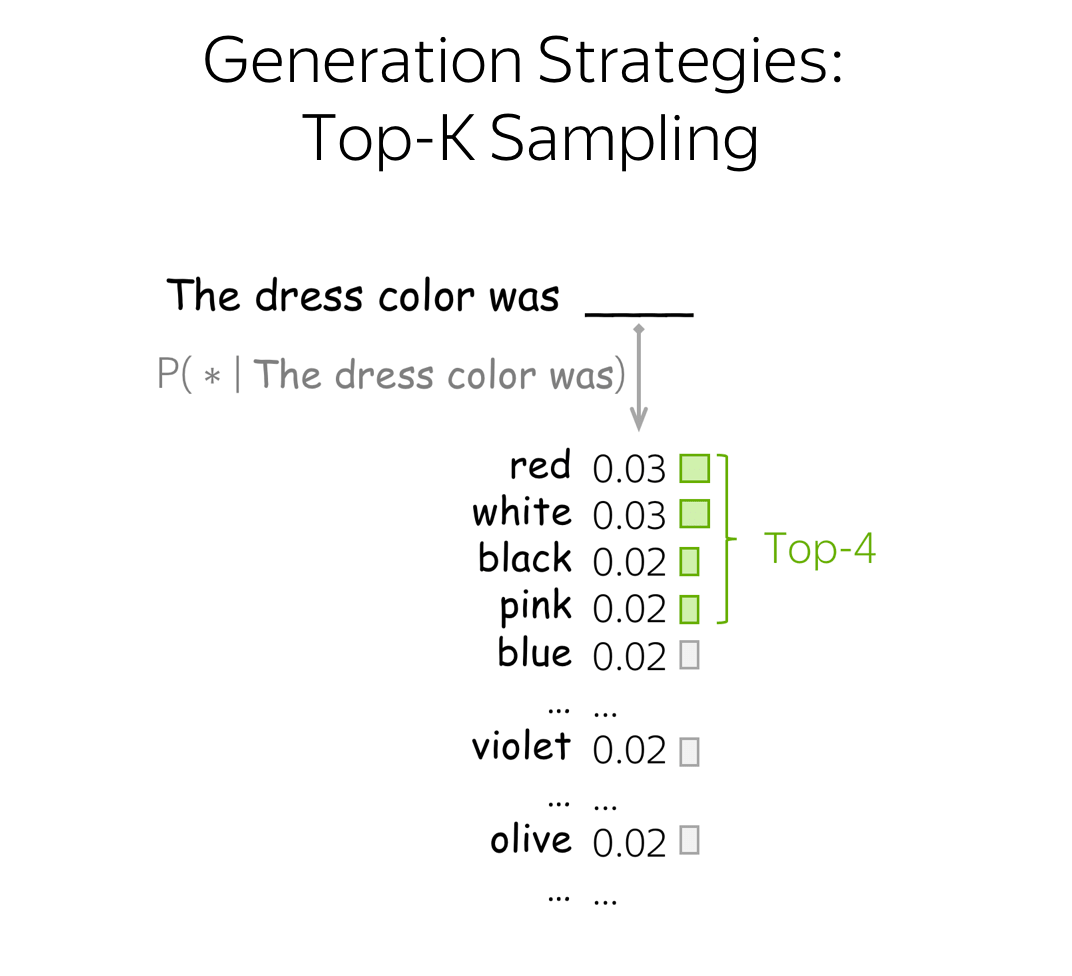

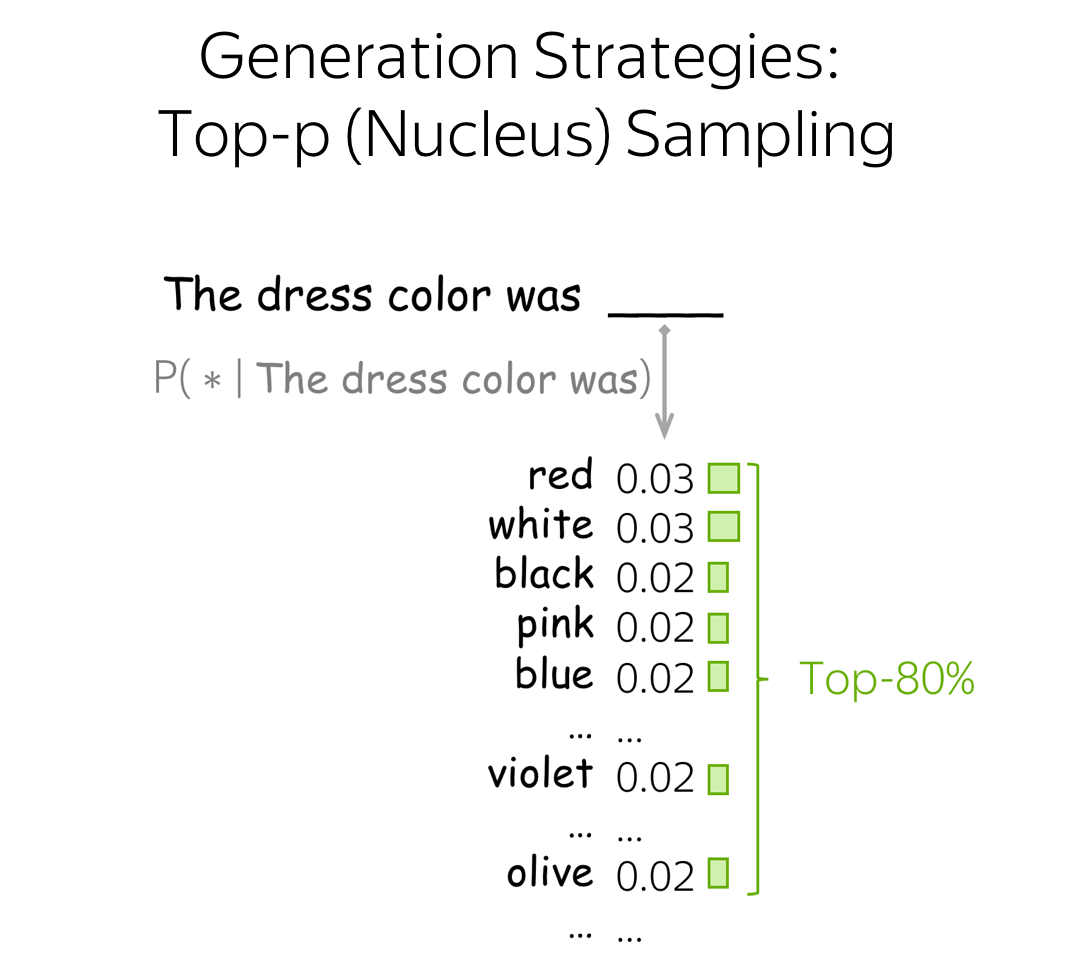

- Generation Strategies

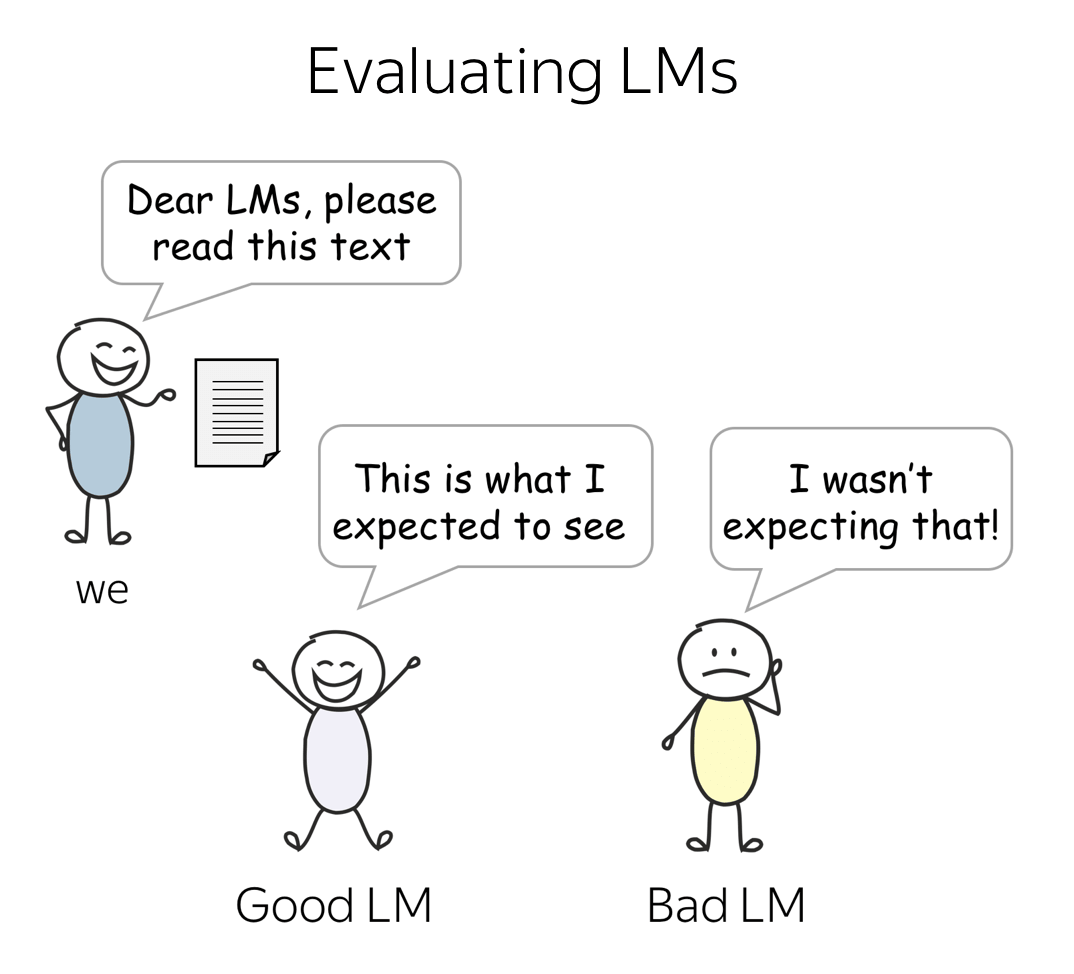

- Evaluating LMs

- Practical Tips
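The N-gram LM and generation topics listed above can be illustrated with a minimal sketch: a bigram model estimated from counts, then sampled token by token. This is not the seminar code; the function names, the `<s>`/`</s>` boundary tokens, and the toy corpus are all assumptions made for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigram_lm(sentences):
    """Count how often each token follows each previous token."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, cur in zip(tokens, tokens[1:]):
            counts[prev][cur] += 1
    return counts


def next_token_probs(counts, prev):
    """Maximum-likelihood estimate of p(token | prev), no smoothing."""
    total = sum(counts[prev].values())
    return {tok: c / total for tok, c in counts[prev].items()}


def generate(counts, rng, max_len=20):
    """Sample from the model until </s> or max_len tokens."""
    tokens, prev = [], "<s>"
    for _ in range(max_len):
        candidates = list(counts[prev].keys())
        weights = list(counts[prev].values())
        prev = rng.choices(candidates, weights=weights, k=1)[0]
        if prev == "</s>":
            break
        tokens.append(prev)
    return " ".join(tokens)


# Hypothetical toy corpus, only to make the sketch runnable.
corpus = ["the cat sat", "the dog sat", "the cat ran"]
lm = train_bigram_lm(corpus)
probs = next_token_probs(lm, "the")
sample = generate(lm, random.Random(0))
```

Sampling proportionally to counts is only one possible generation strategy; greedy decoding would instead pick the most frequent continuation at each step.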

Analysis and Interpretability

Bonus:

Seminar & Homework

Generate a Text with Ngram LMs

Neural Language Models

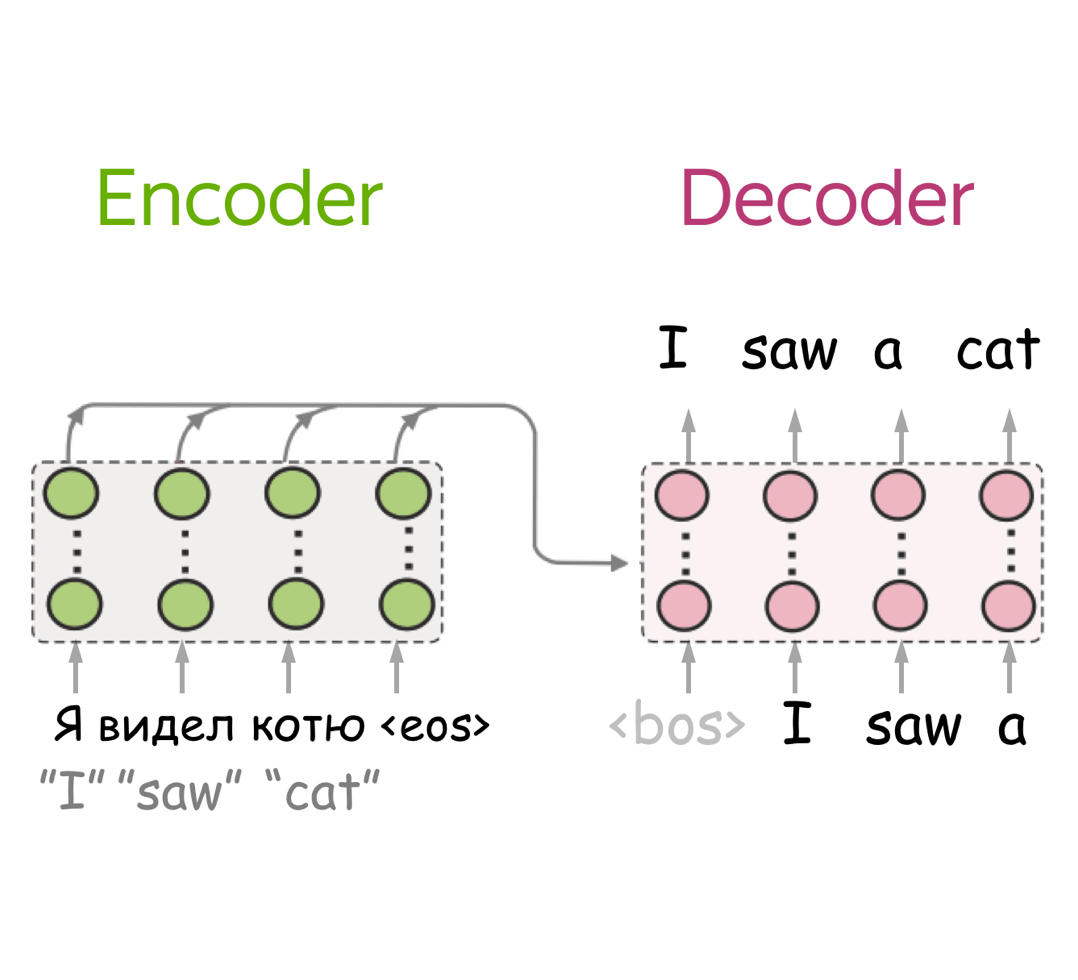

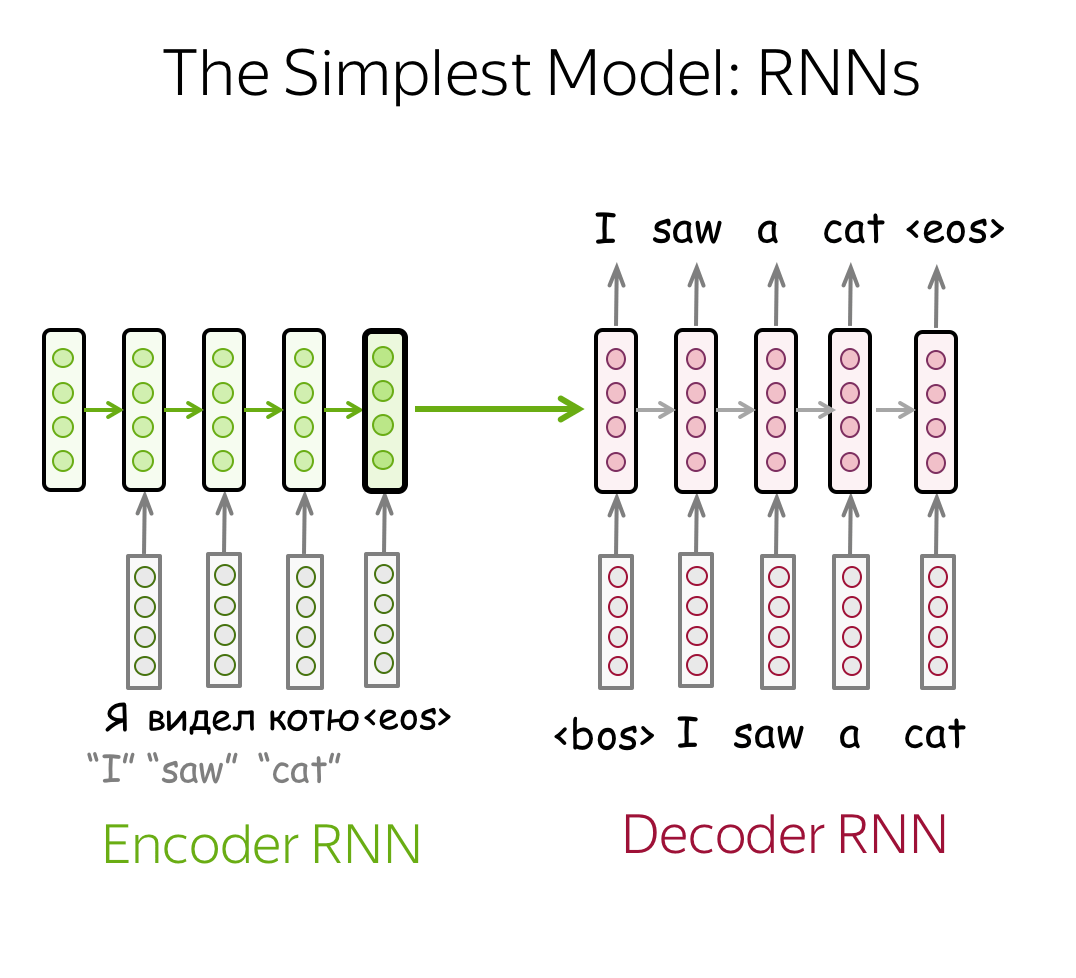

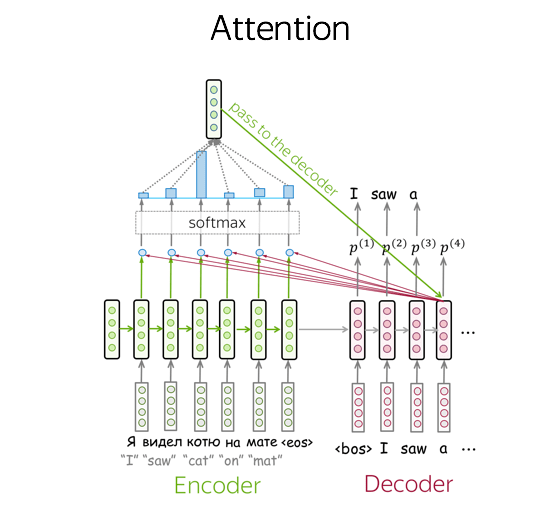

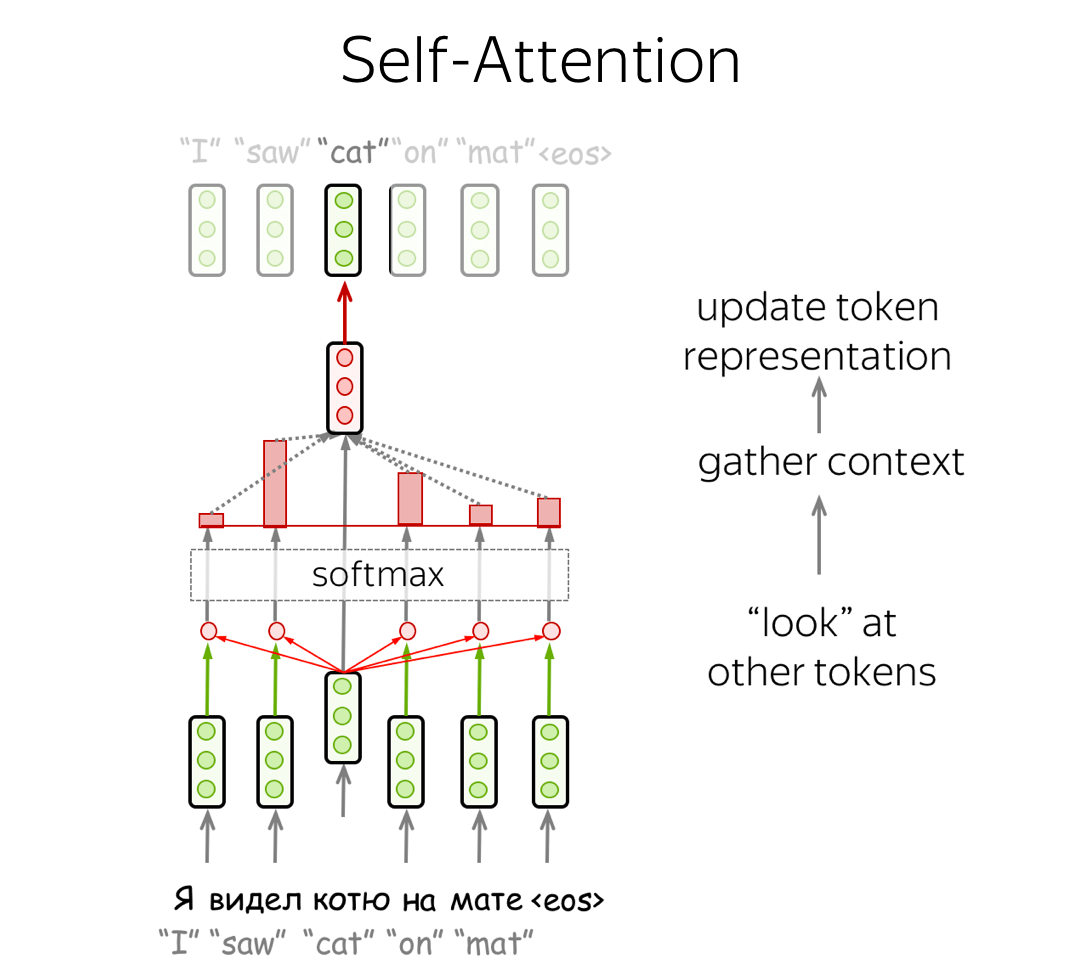

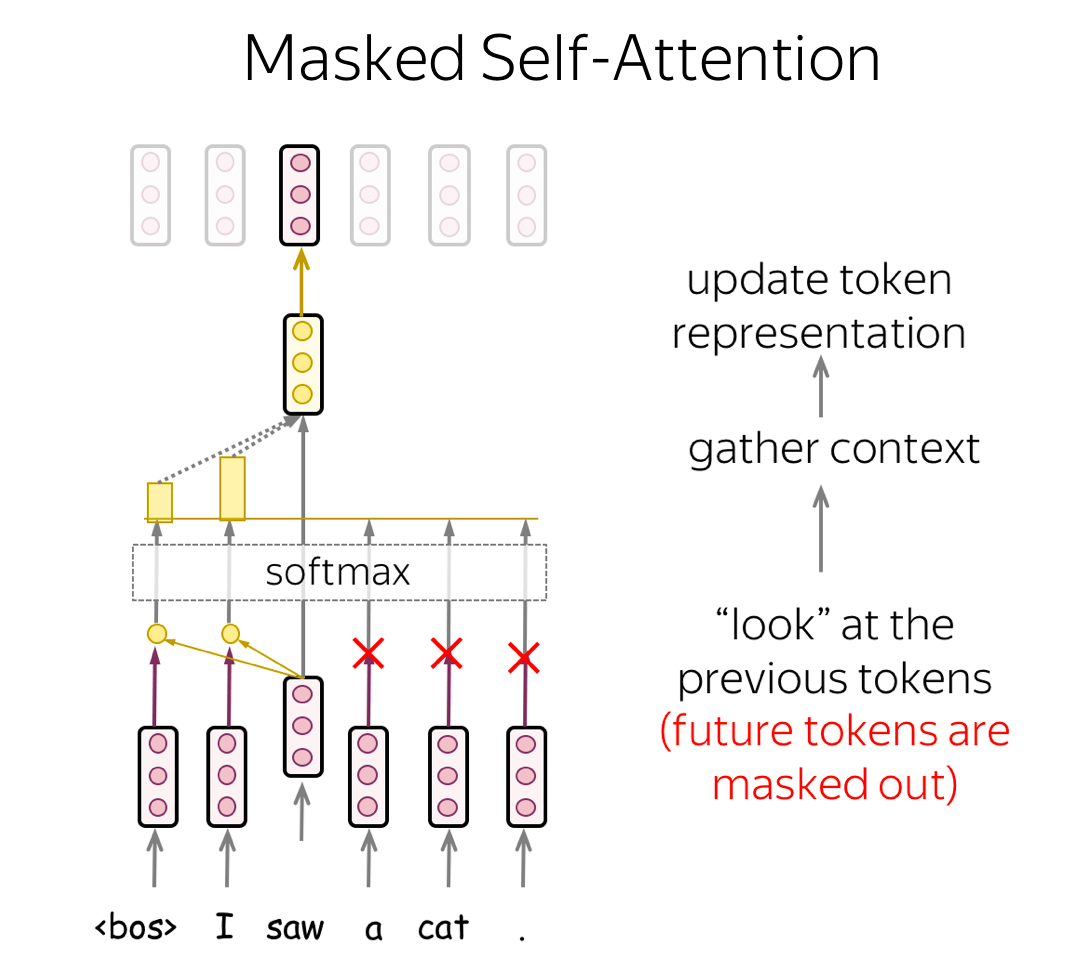

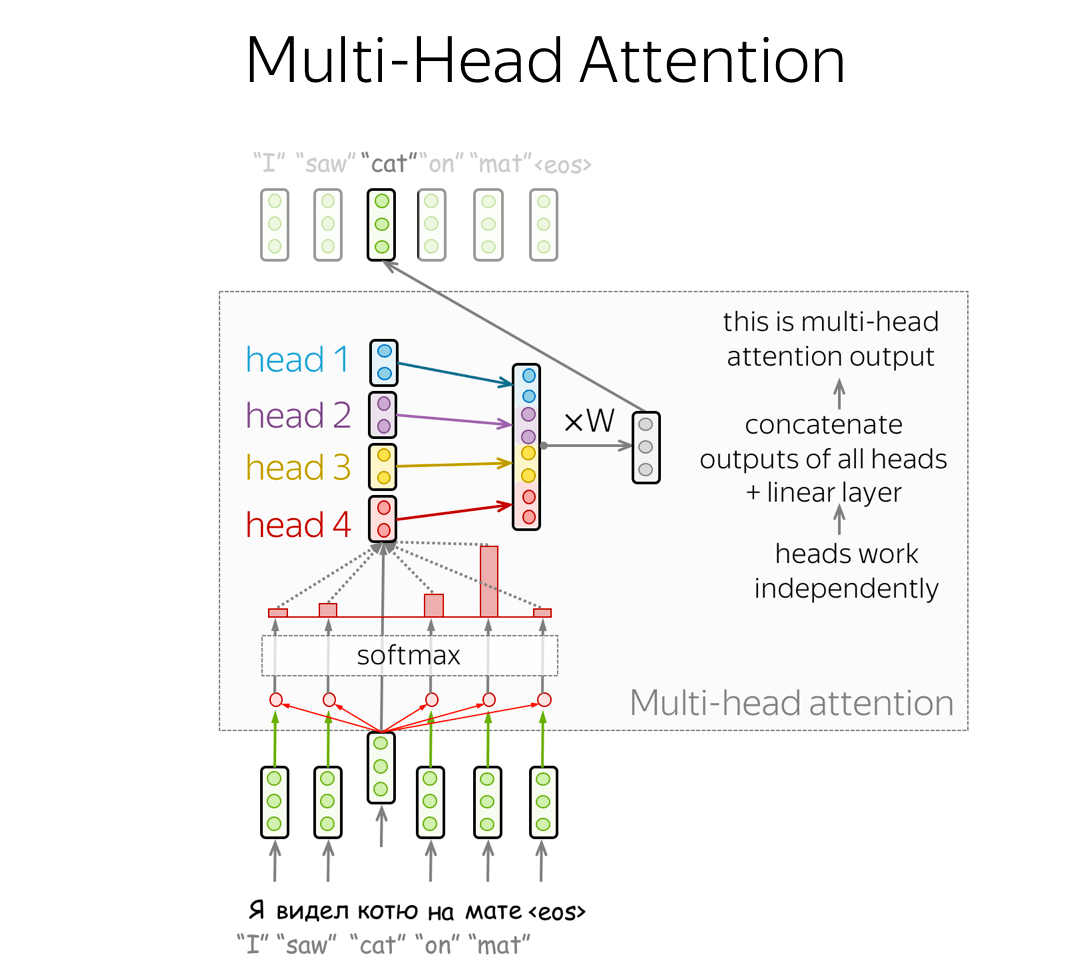

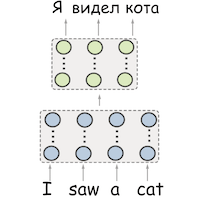

Seq2seq and Attention

- Seq2seq Basics (Encoder-Decoder, Training, Simple Models)

- Attention

- Transformer

- Subword Segmentation (e.g., BPE)

- Inference (e.g., beam search)

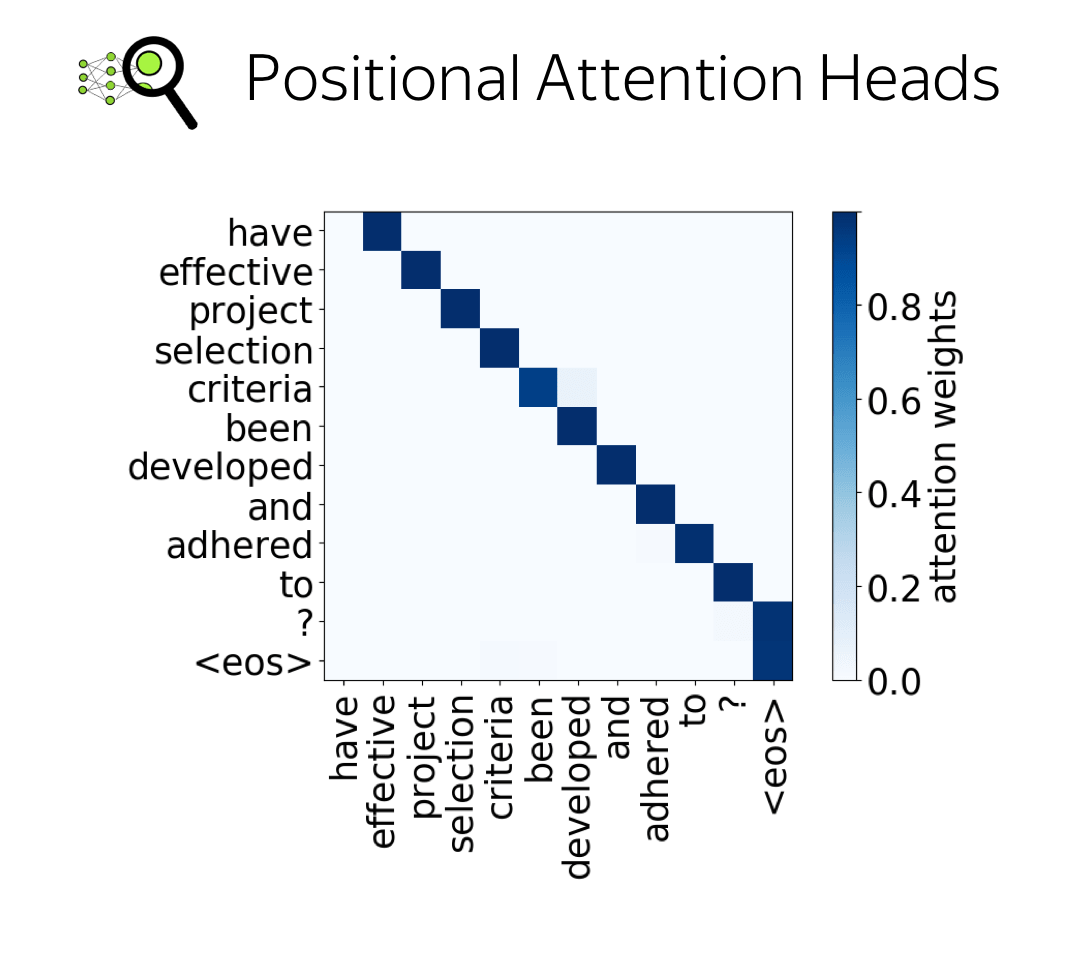

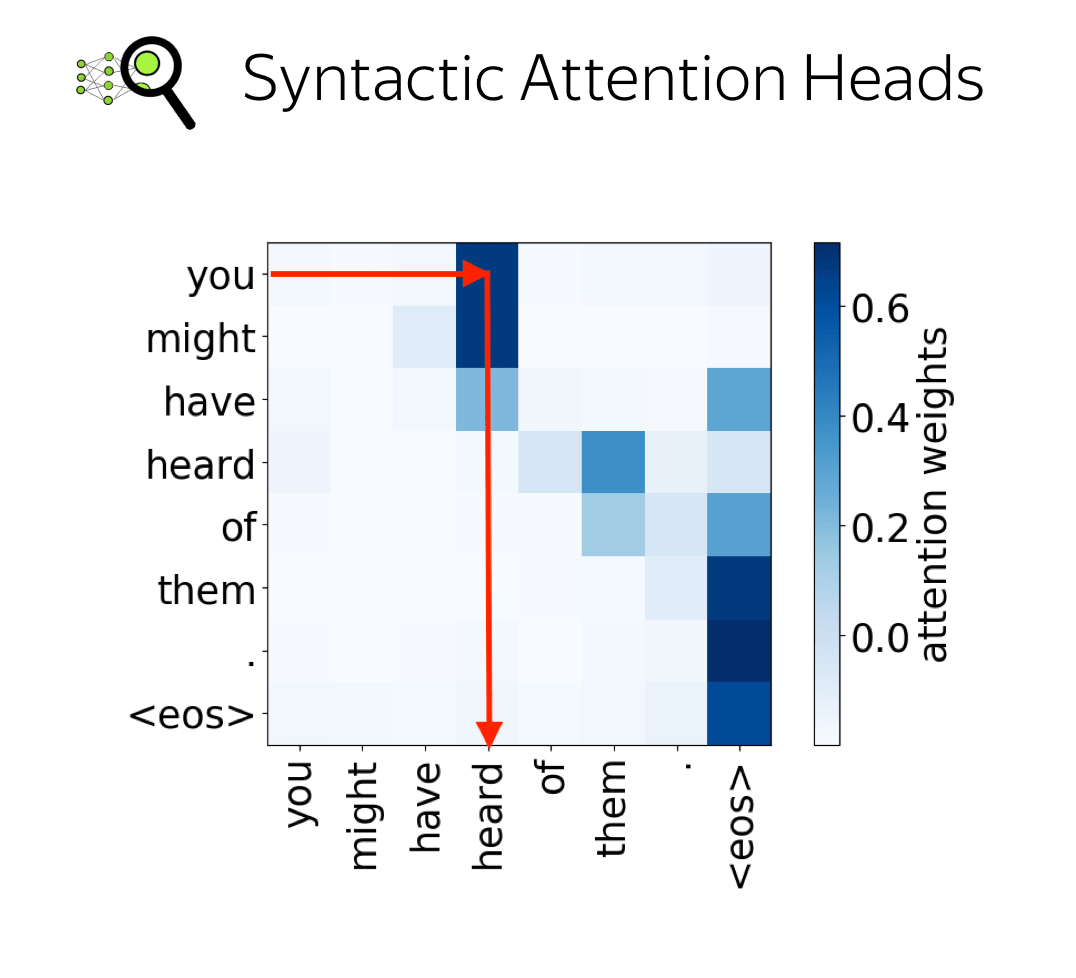

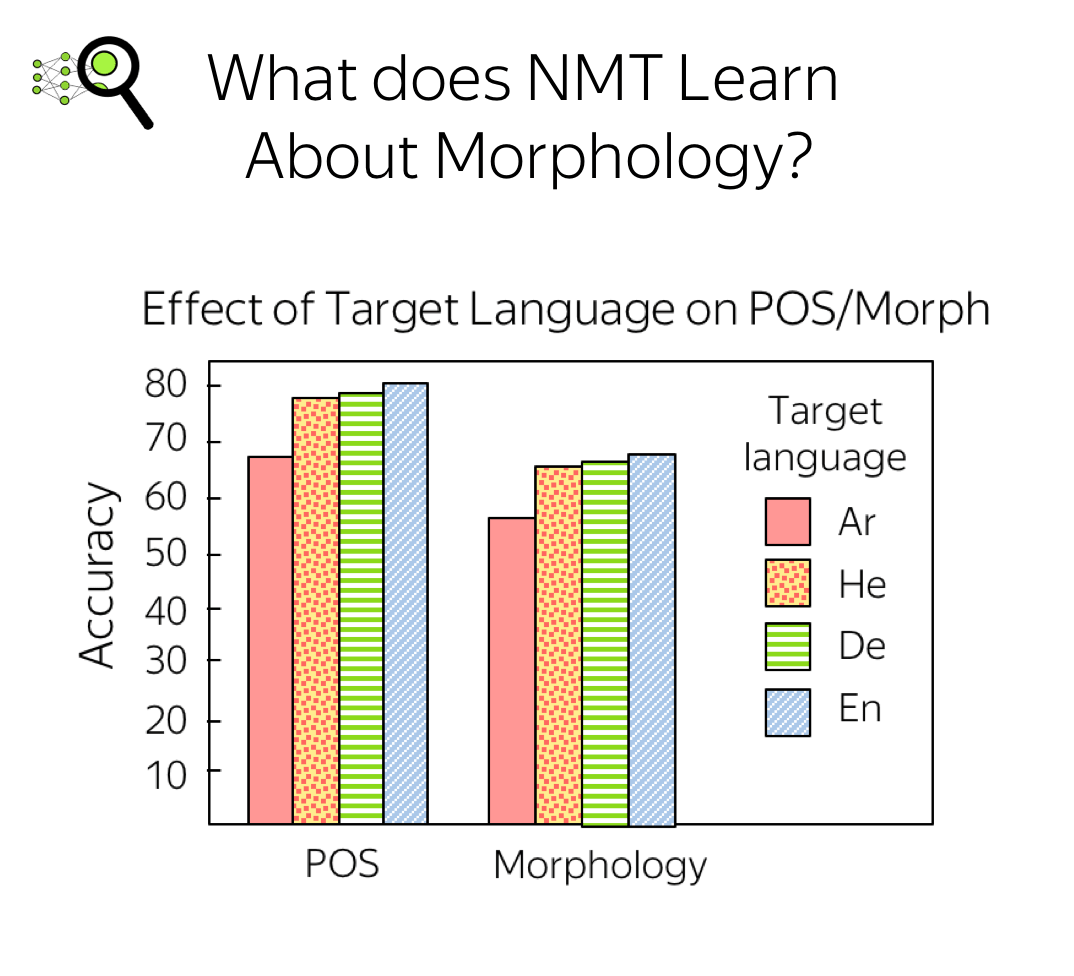

Analysis and Interpretability

Bonus:

Seminar & Homework

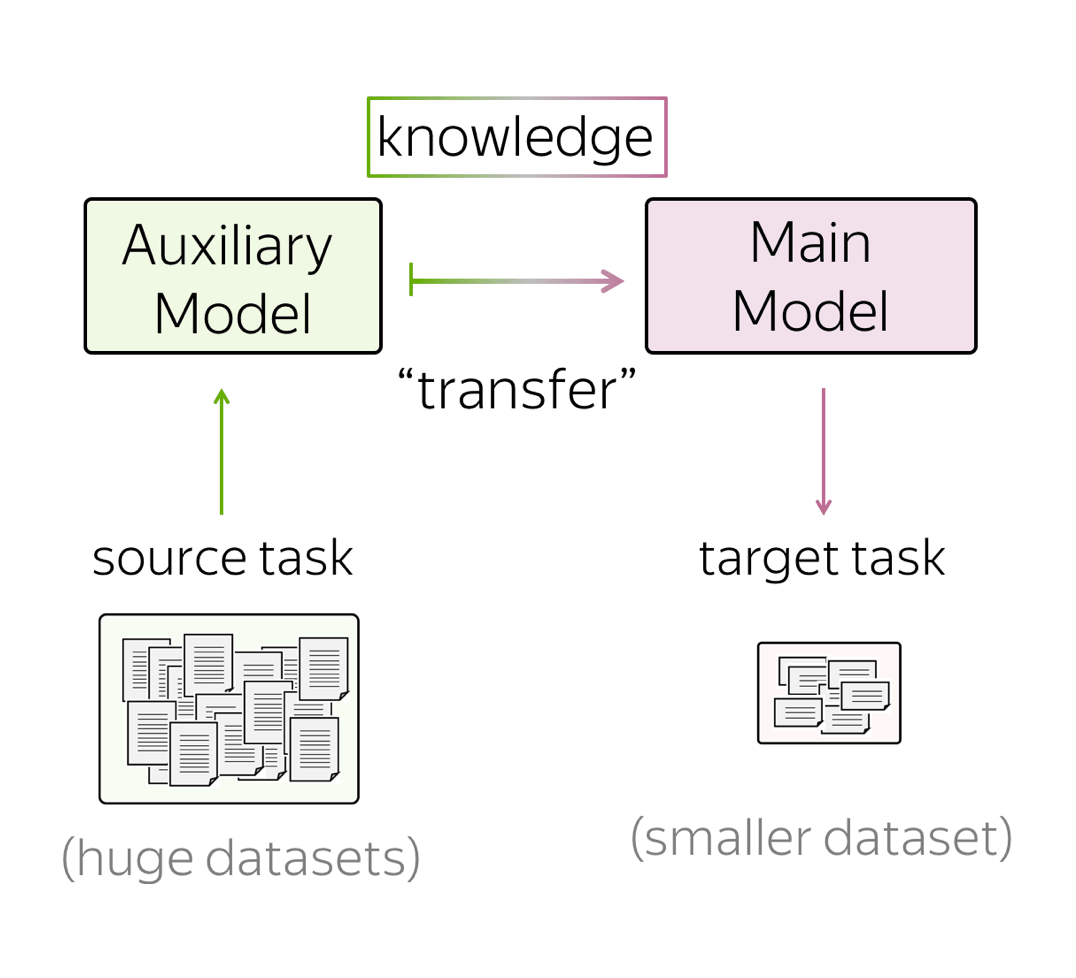

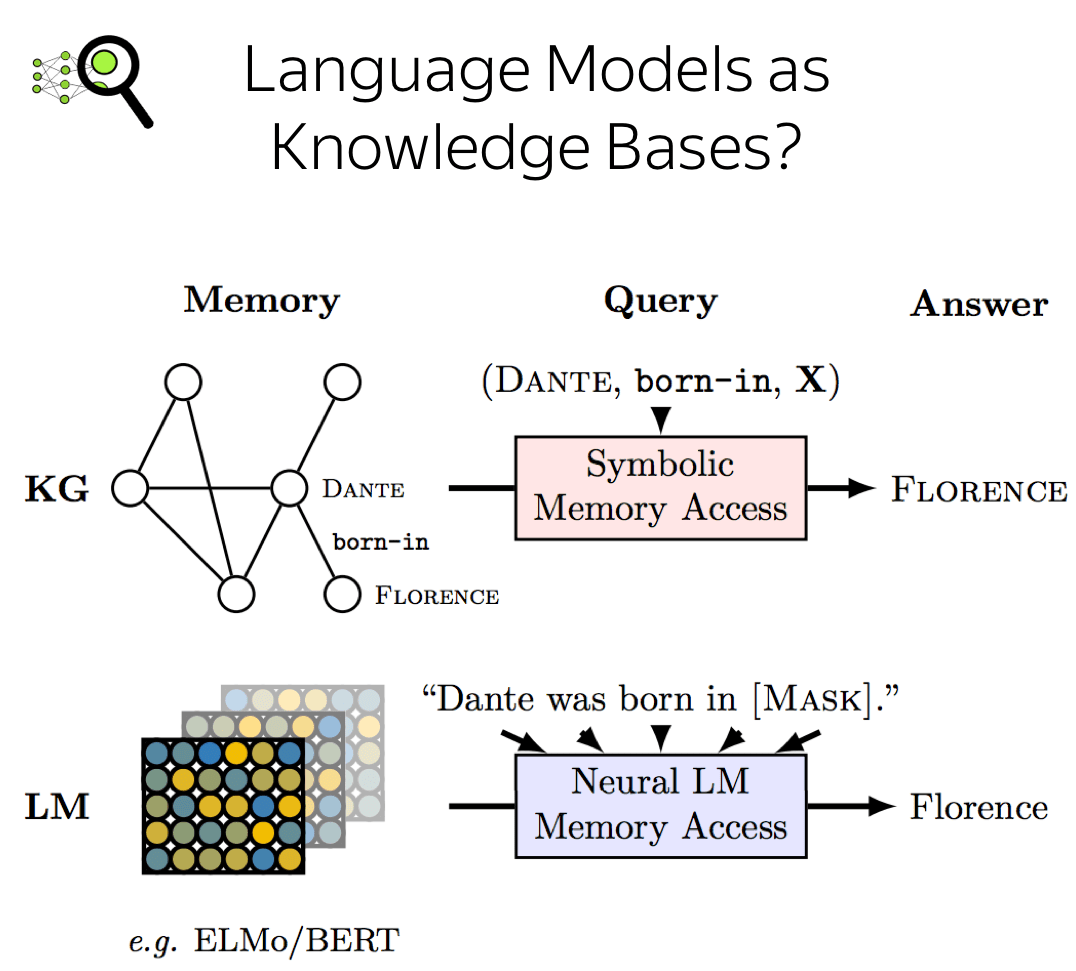

Transfer Learning

- What is Transfer Learning?

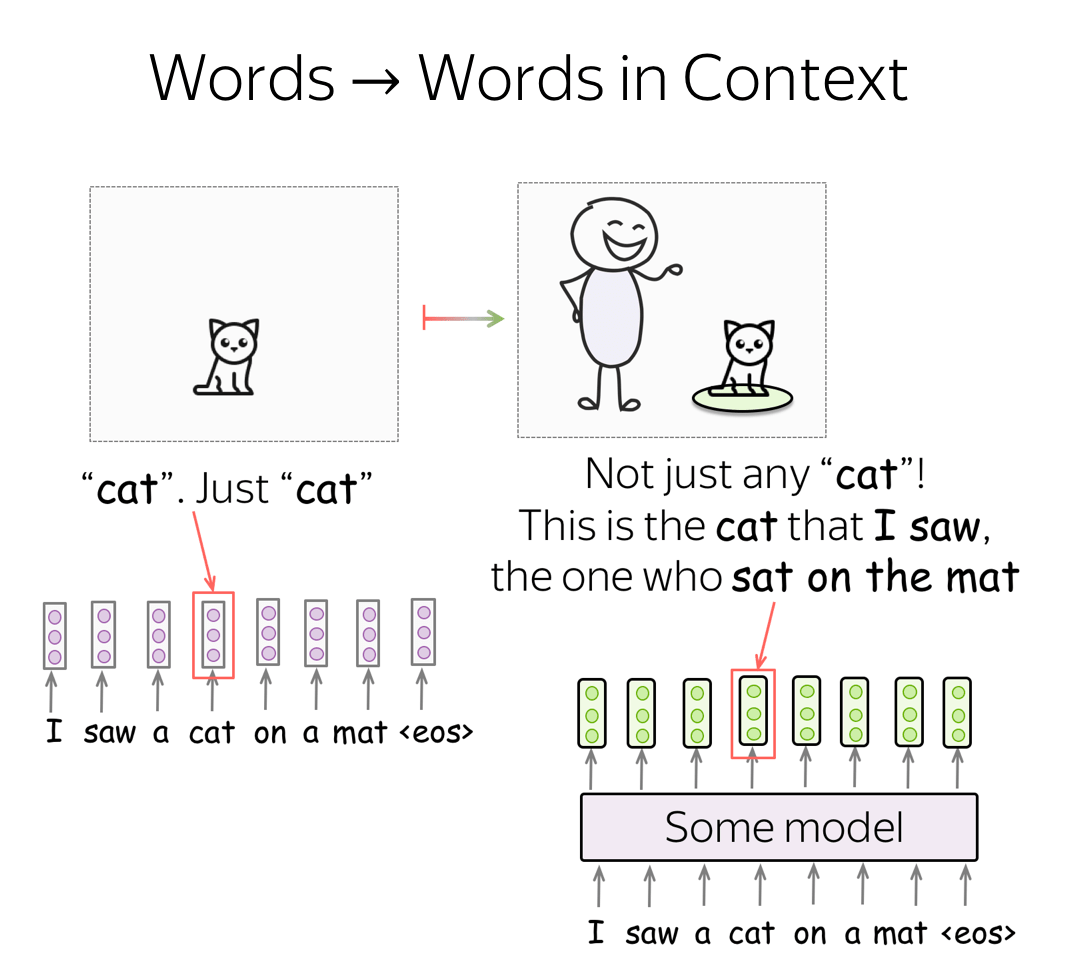

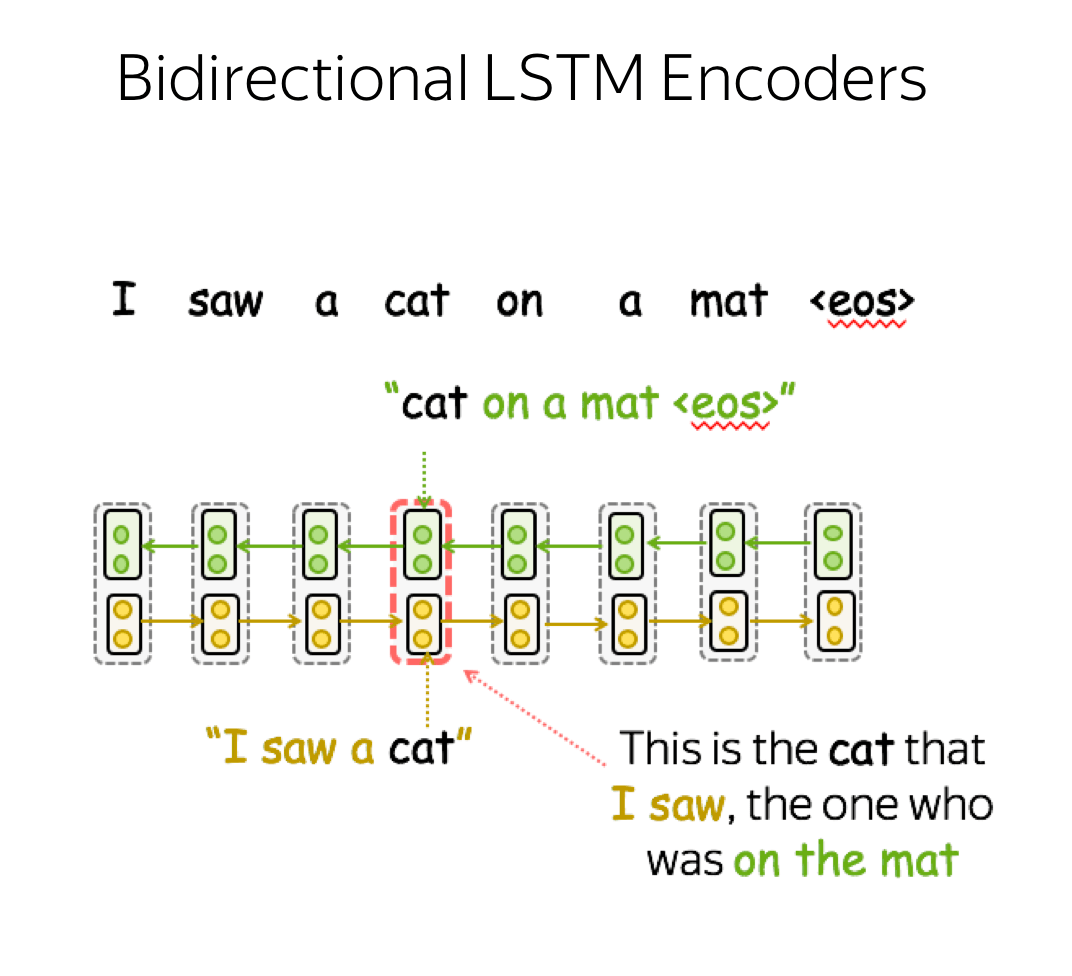

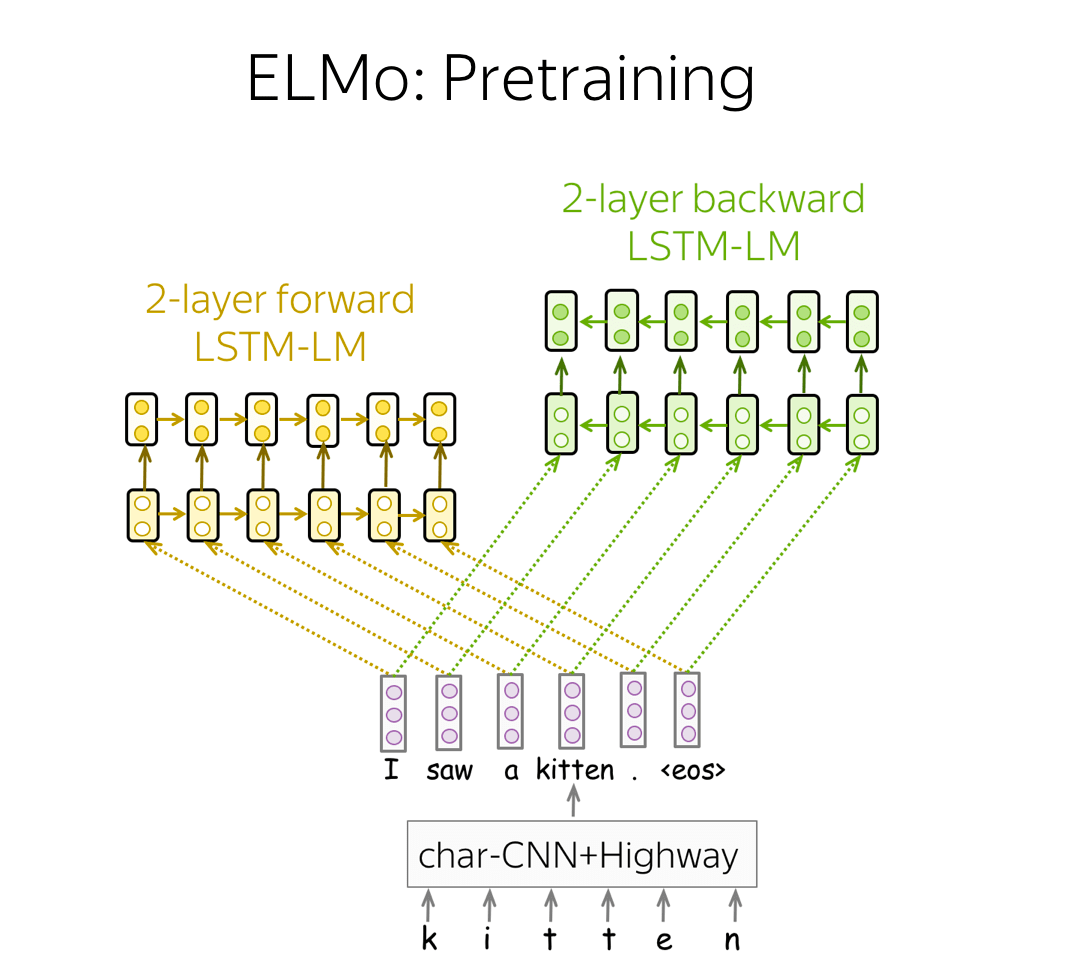

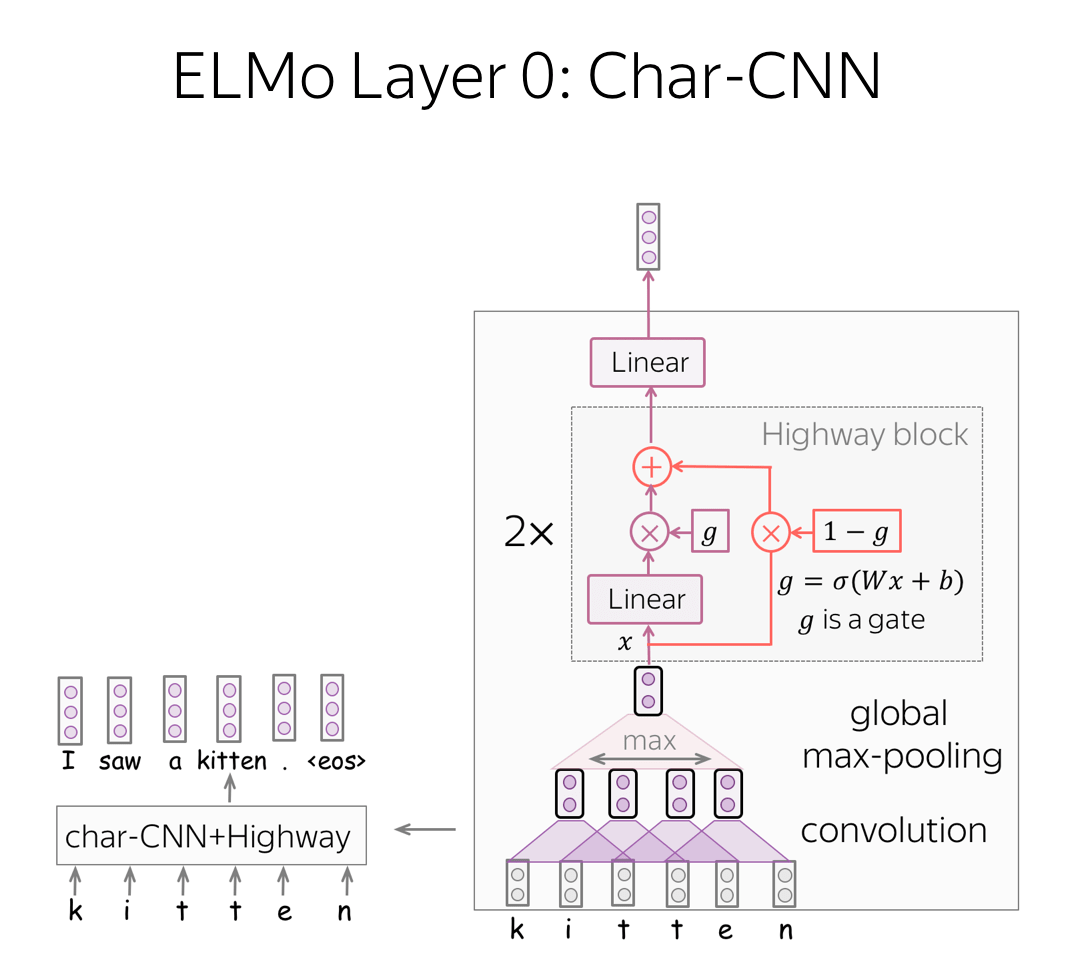

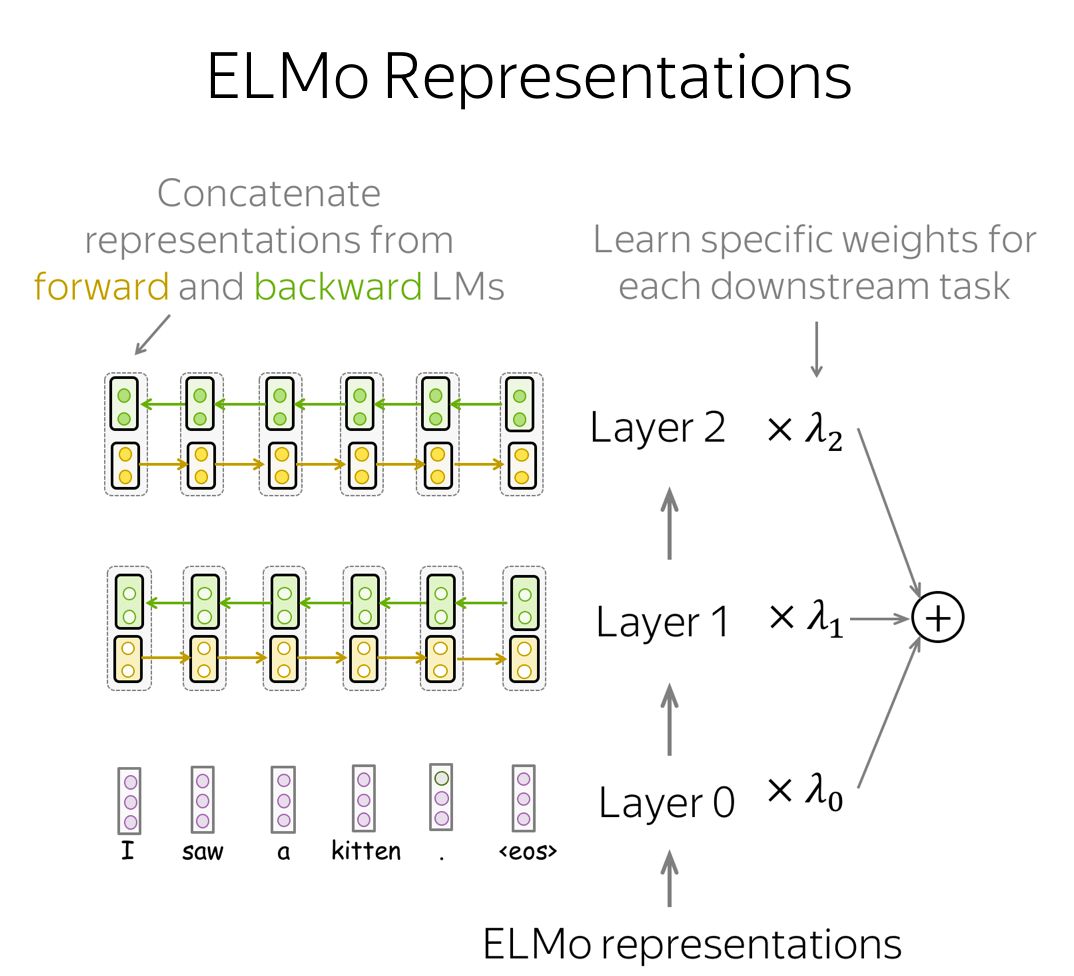

- From Words to Words-in-Context (CoVe, ELMo)

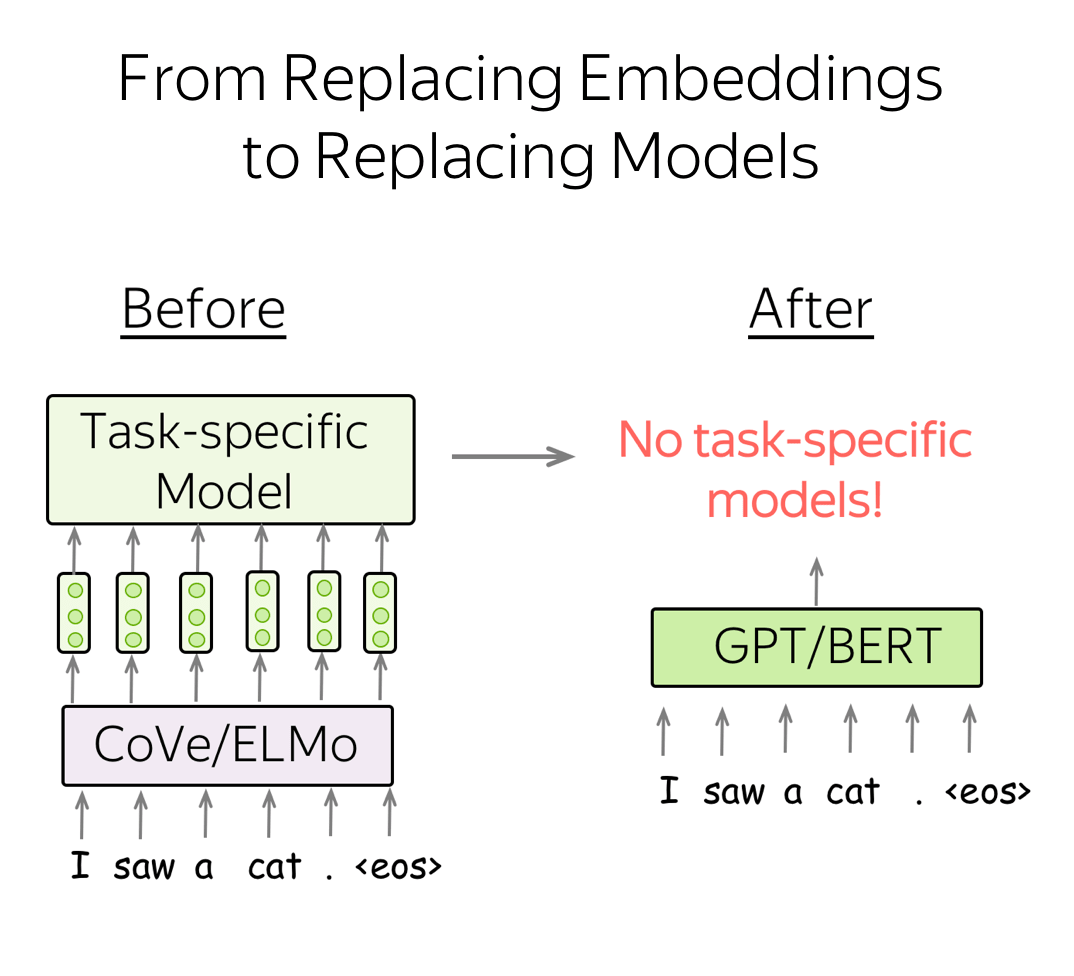

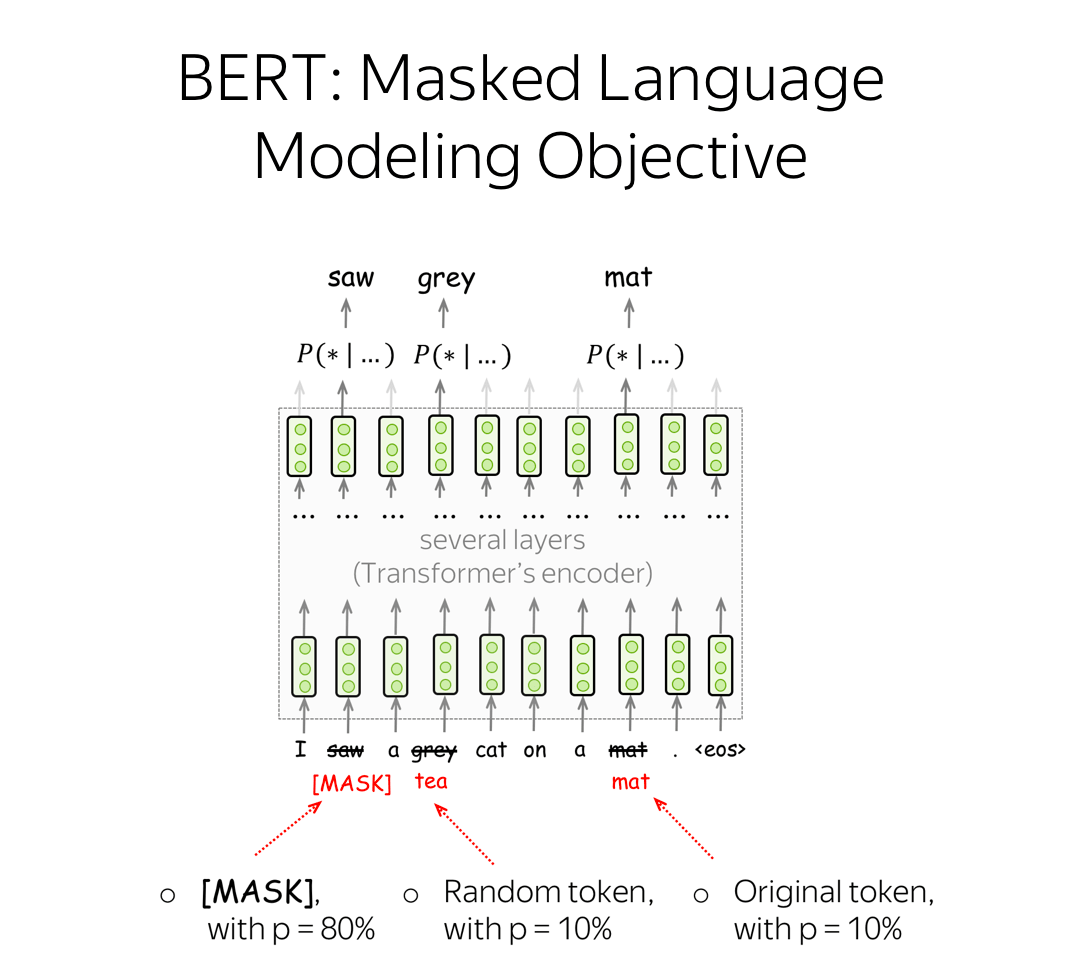

- From Replacing Embeddings to Replacing Models (GPT, BERT)

- (A Bit of) Adaptors

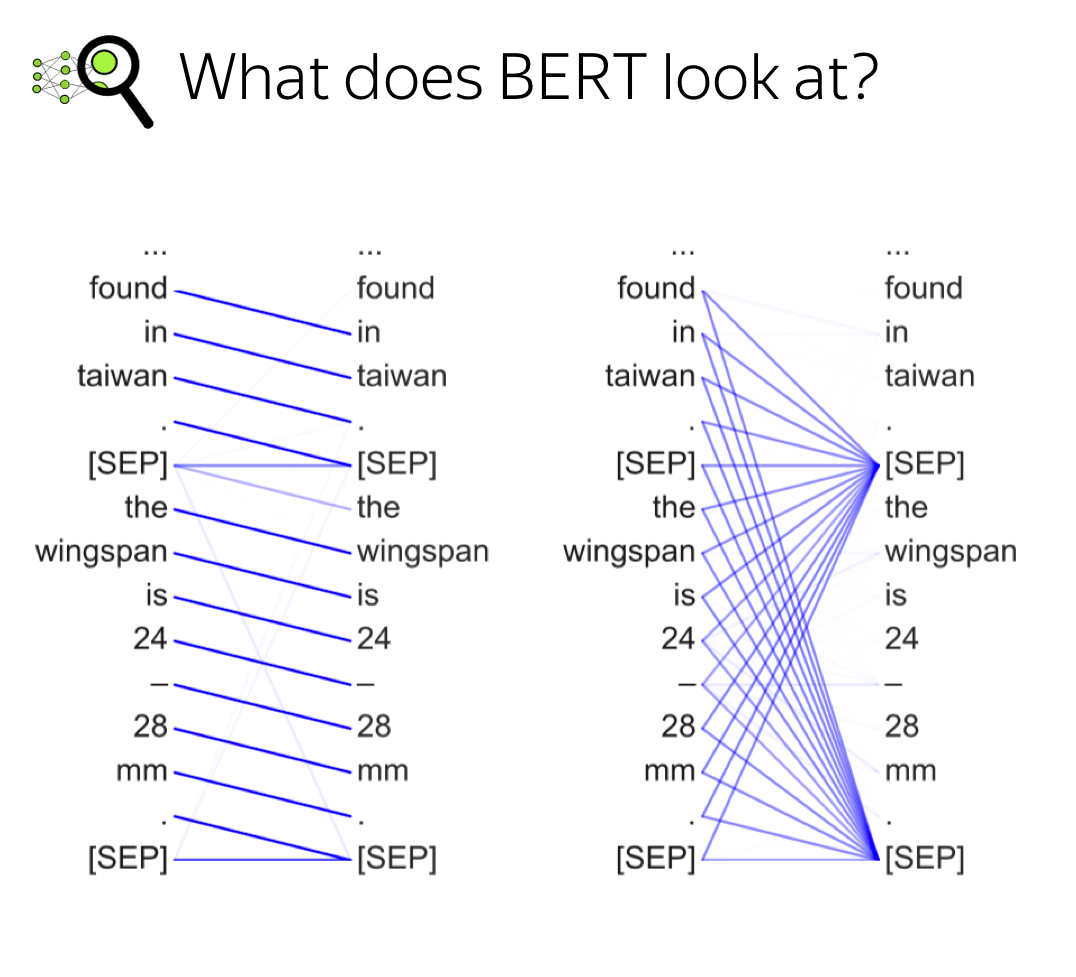

Analysis and Interpretability


Seminar & Homework

Weeks 5 and 6 in the course repo.

Supplementary

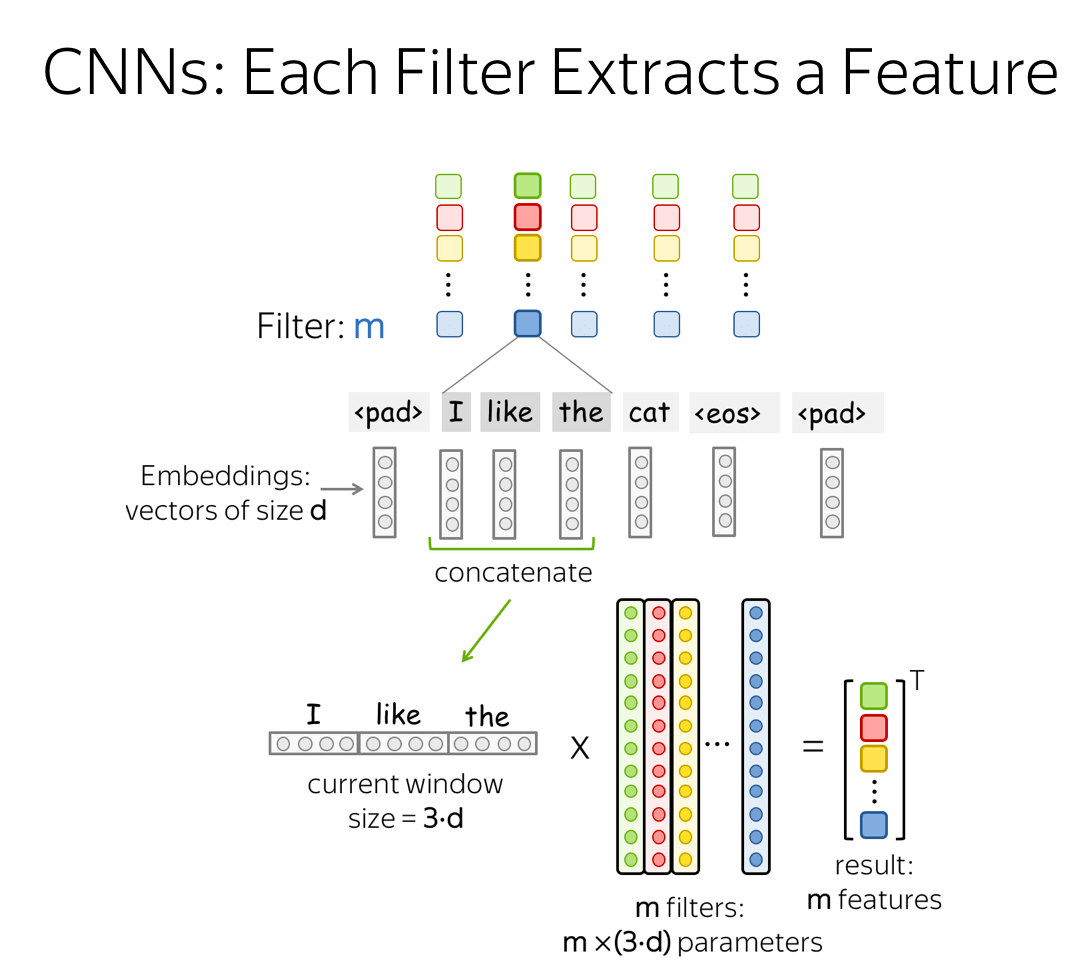

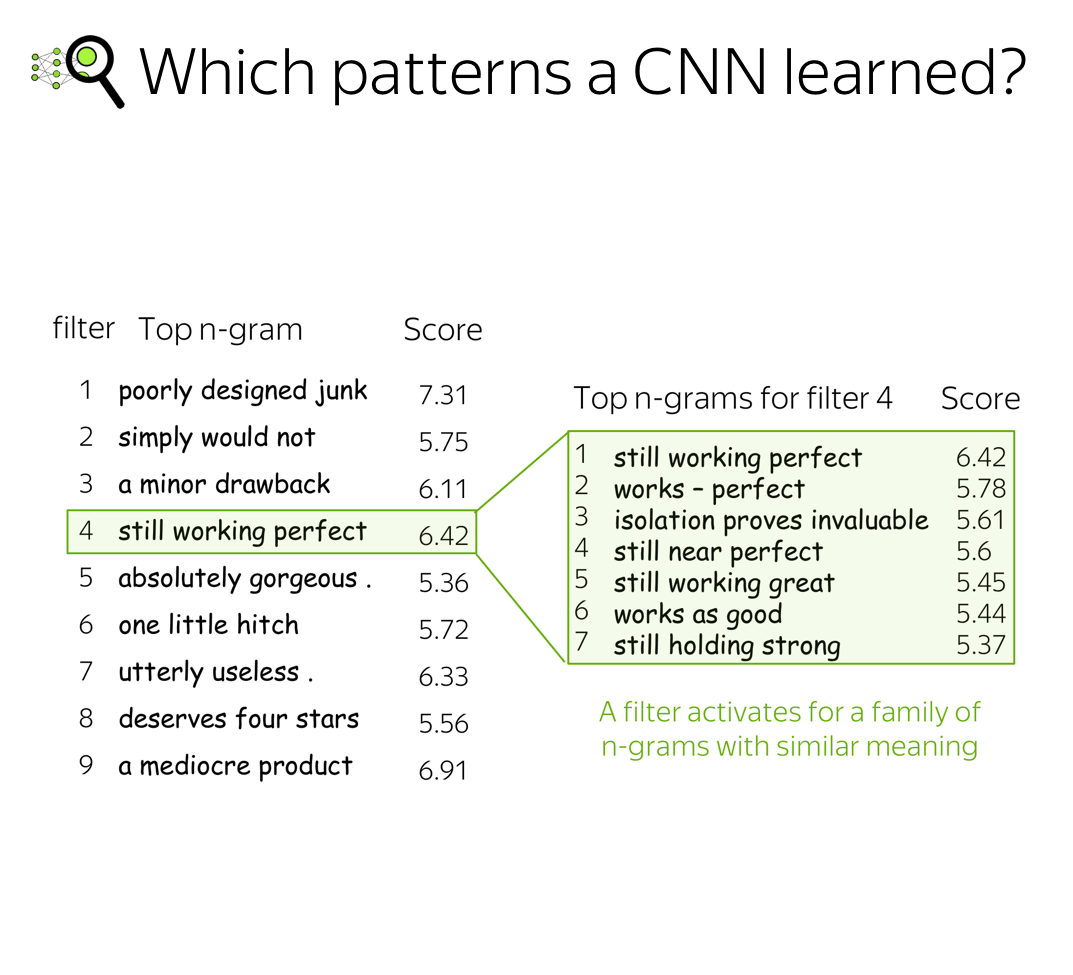

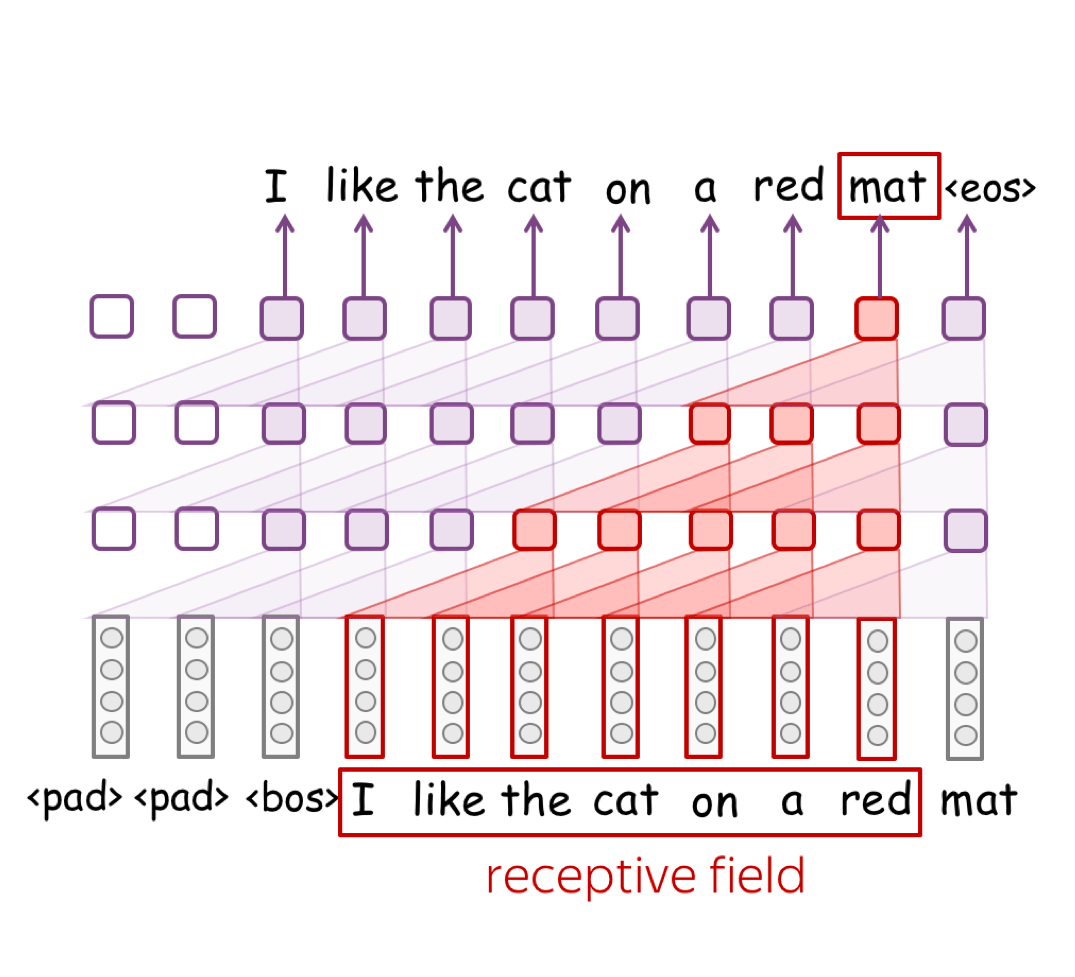

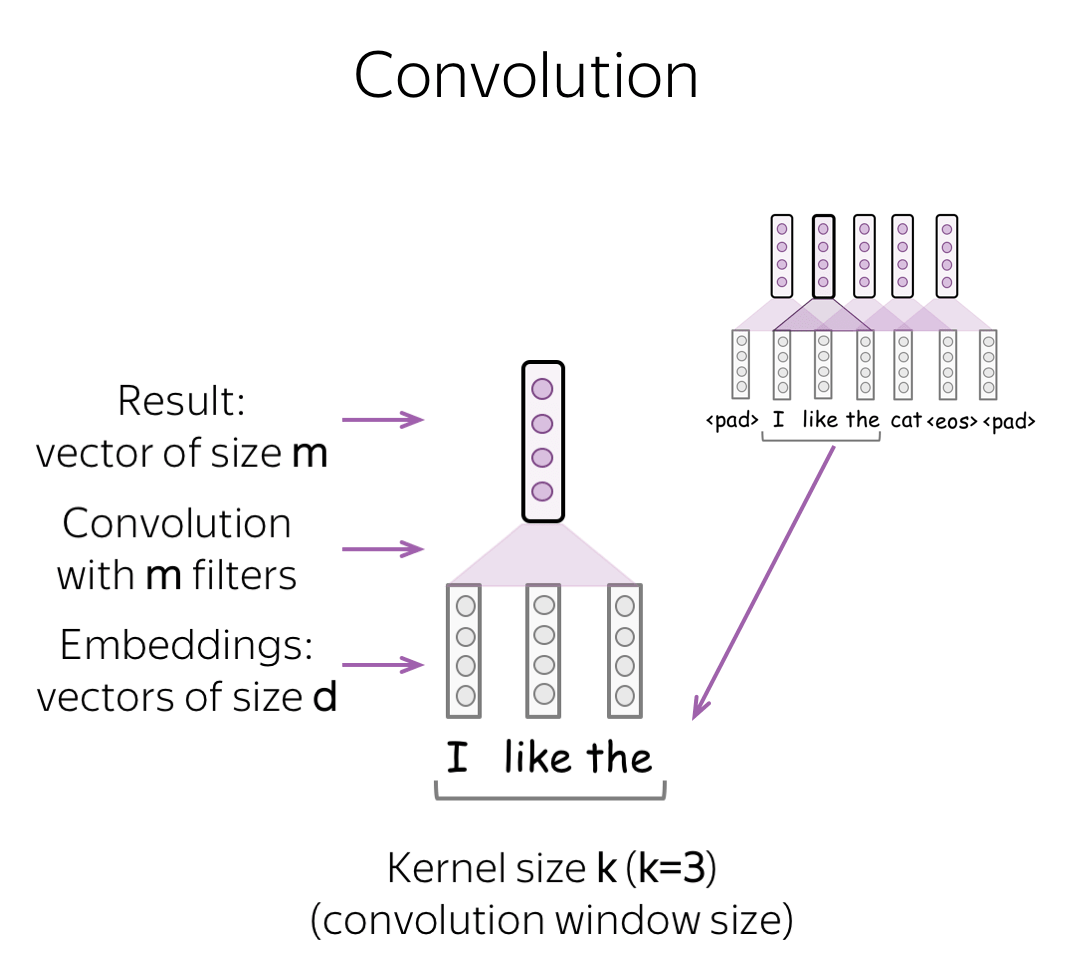

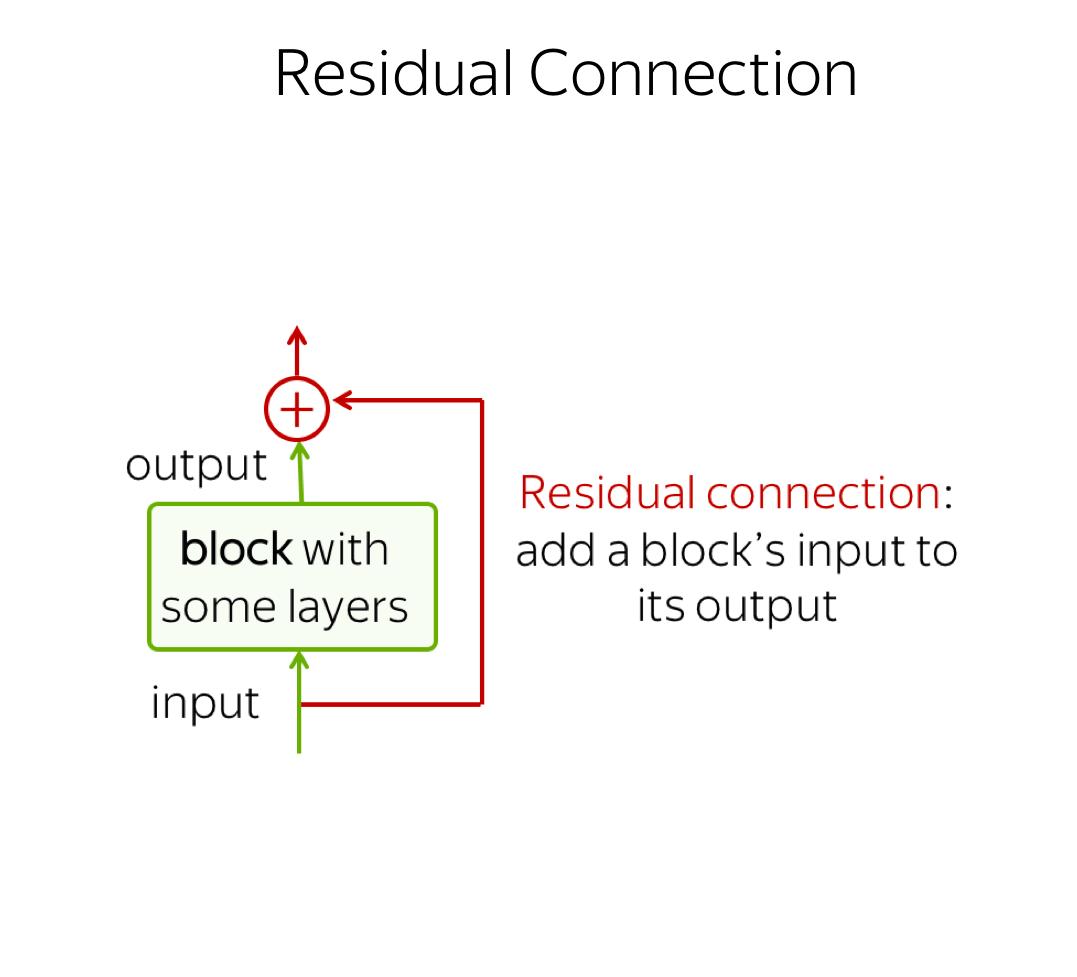

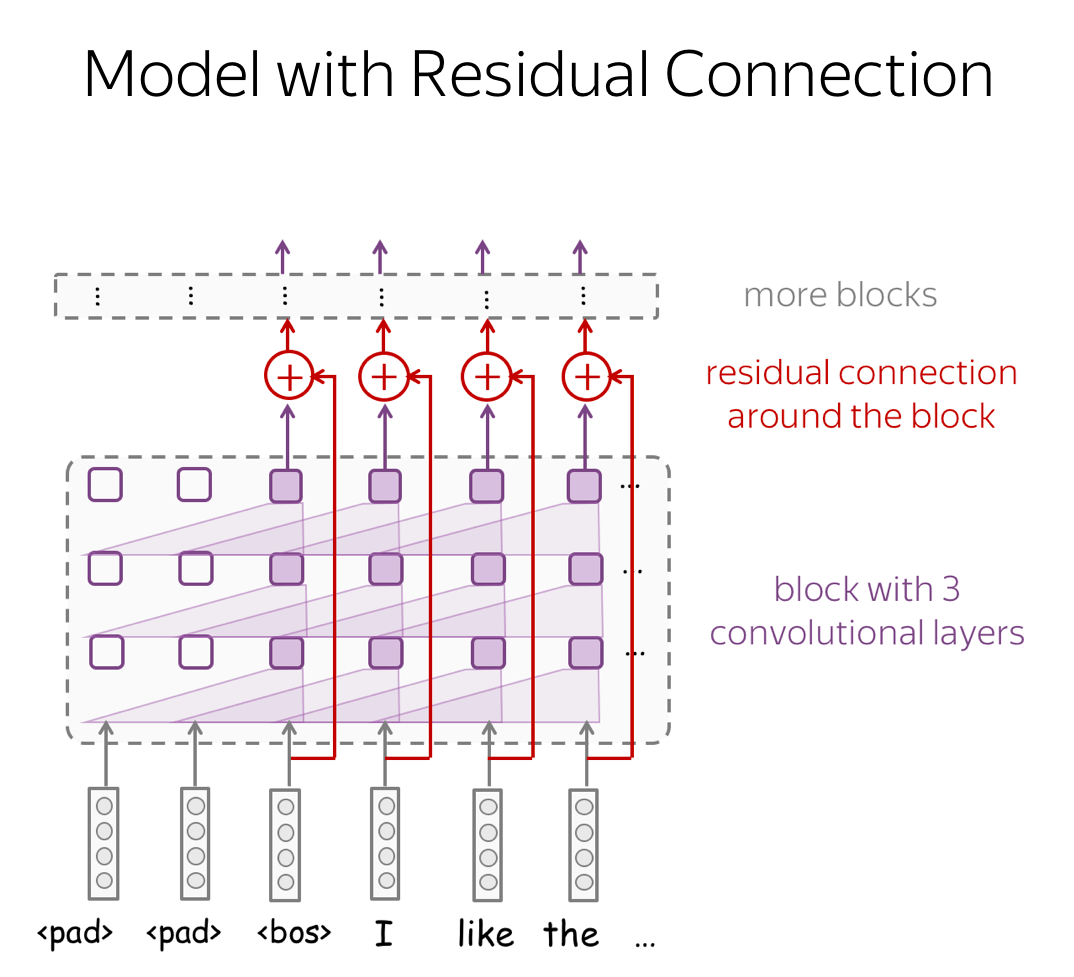

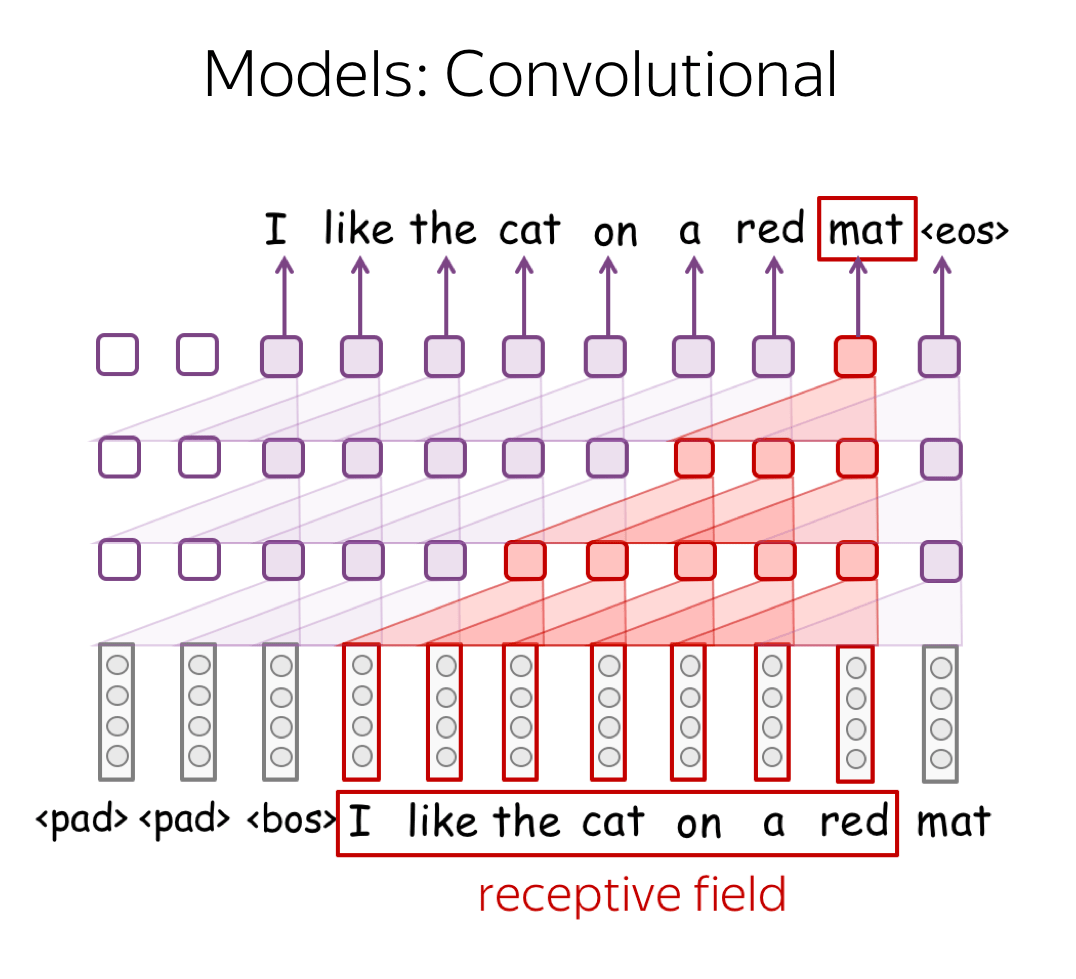

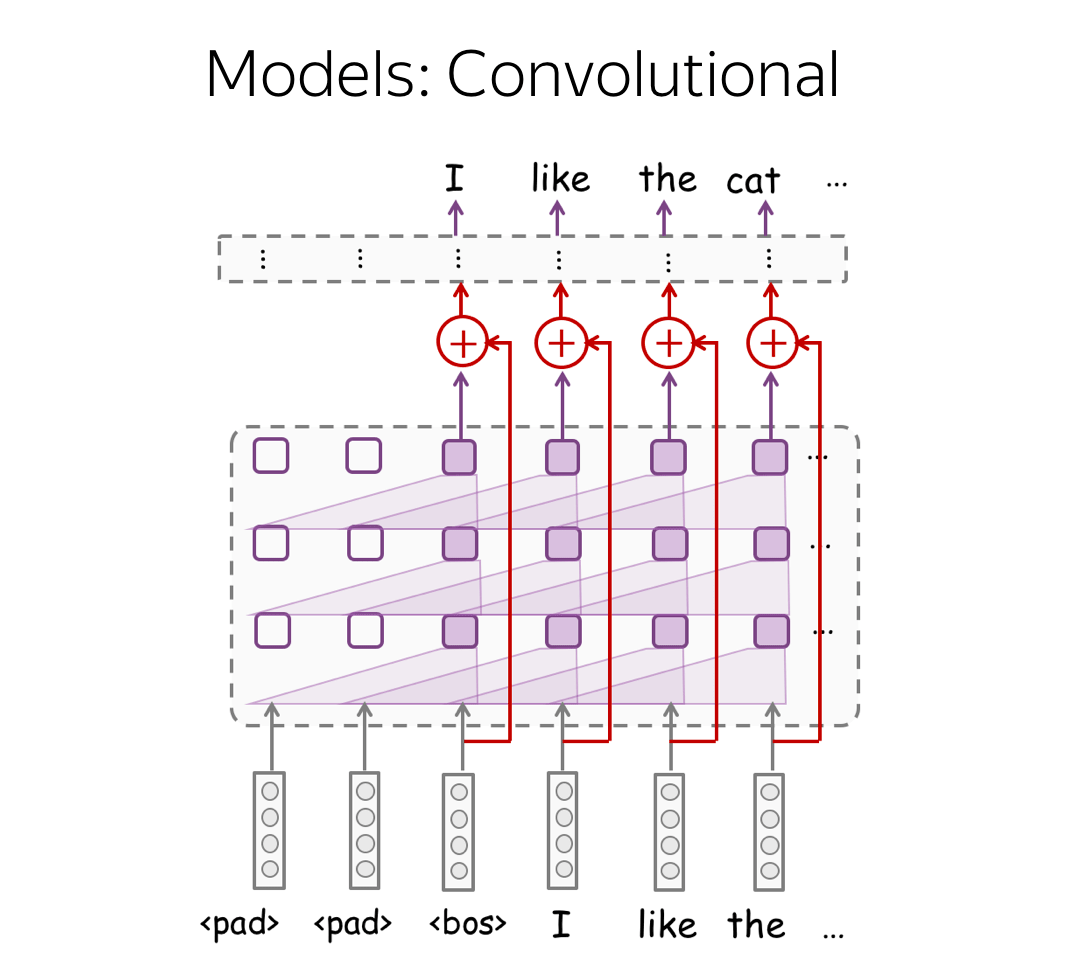

Convolutional Networks

- Intuition

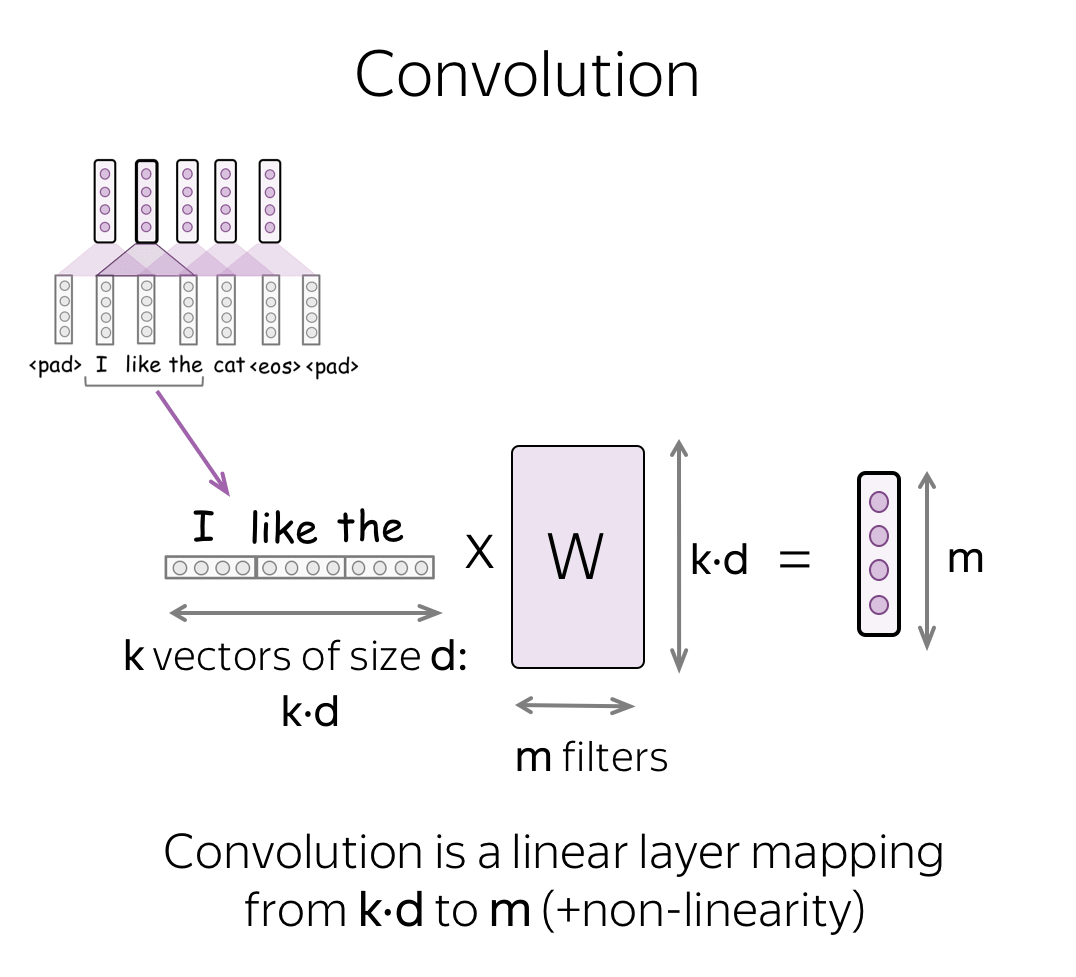

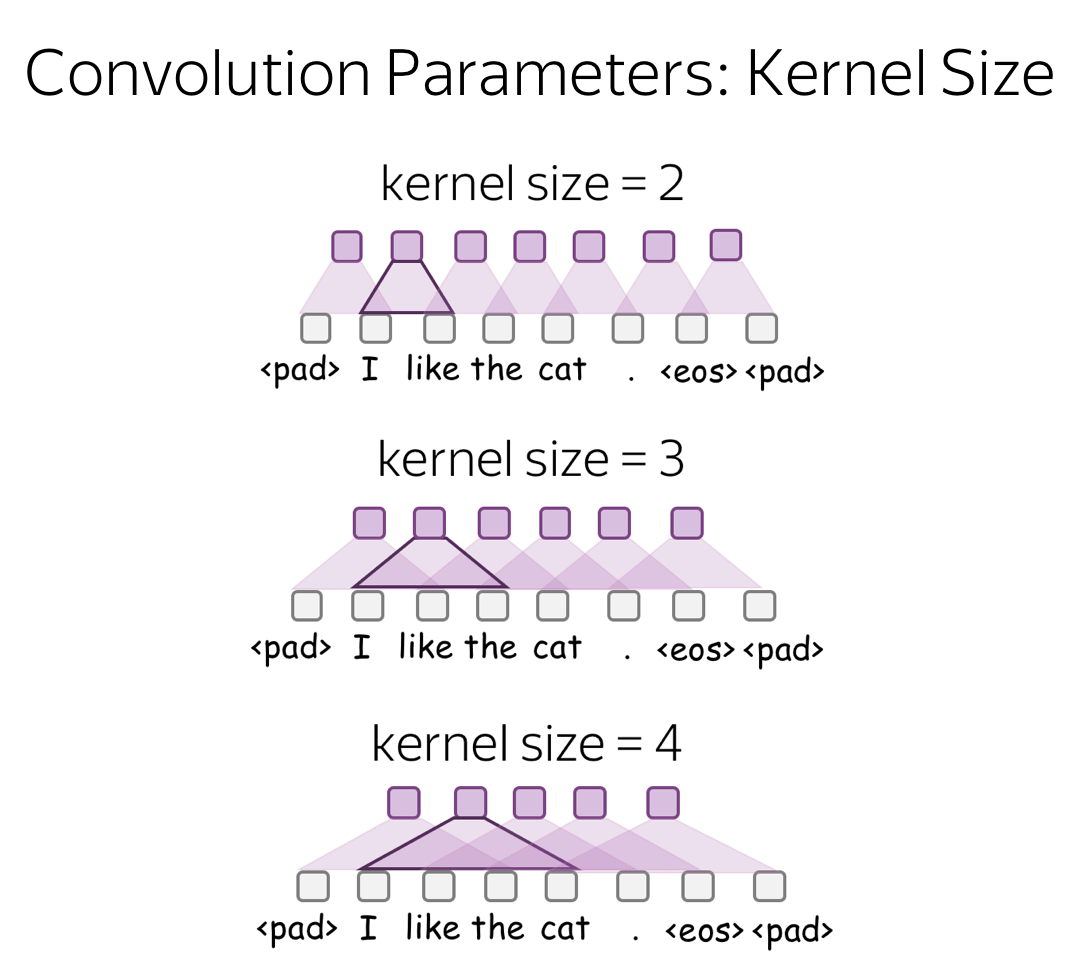

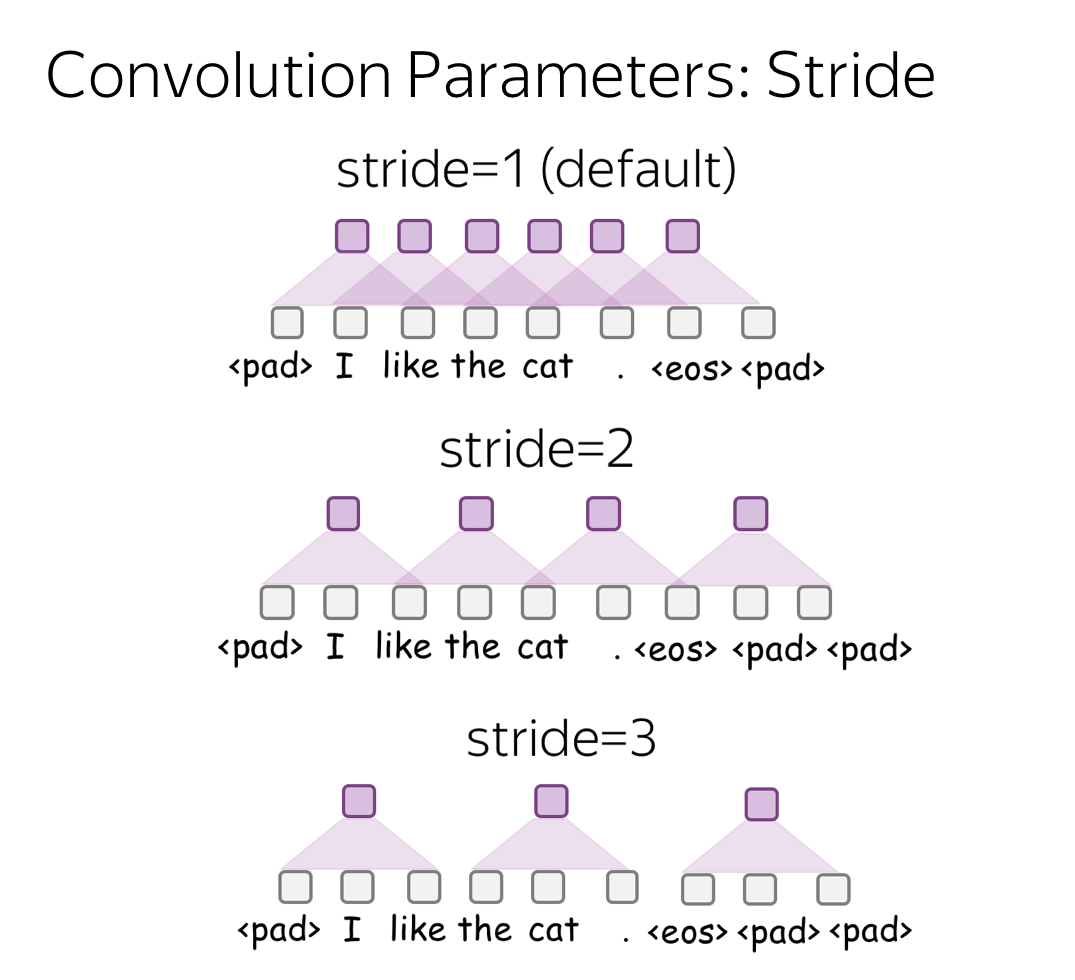

- Building Blocks: Convolution (and parameters: kernel, stride, padding, bias)

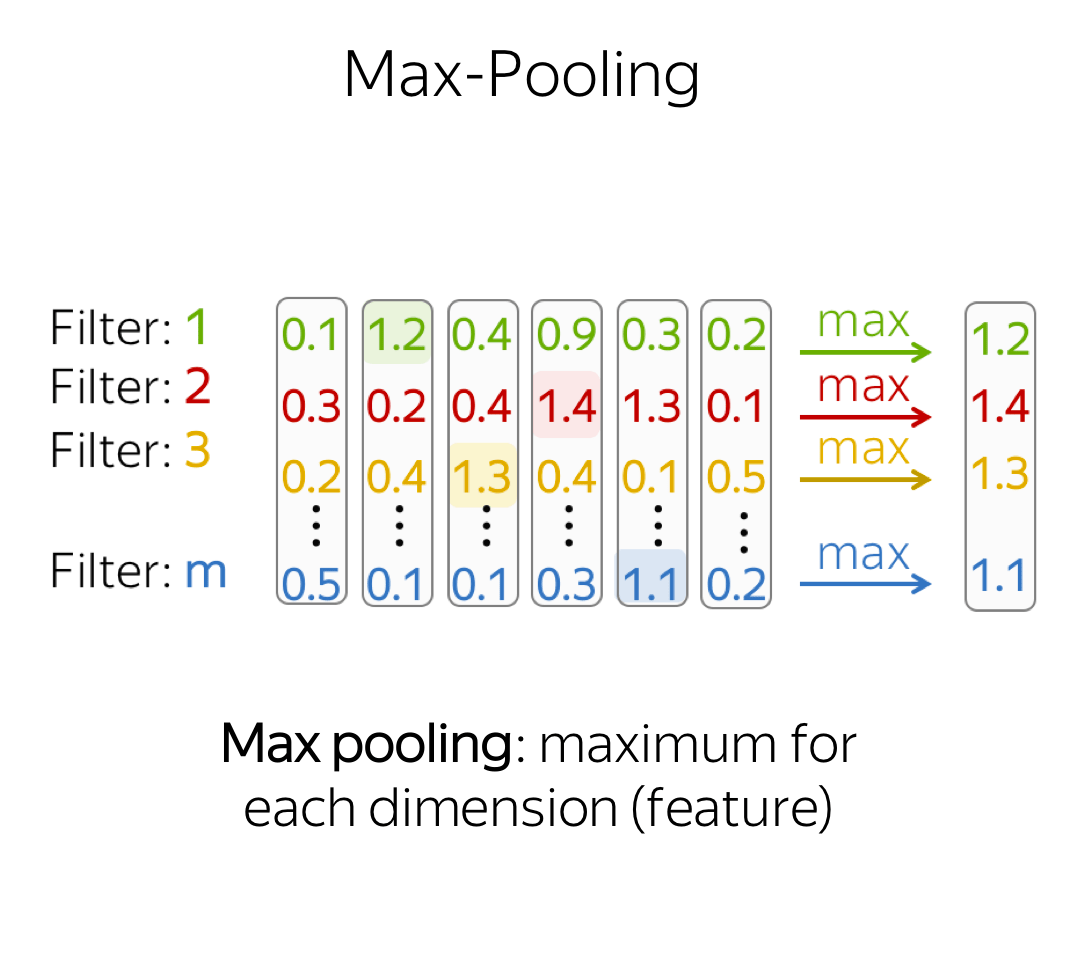

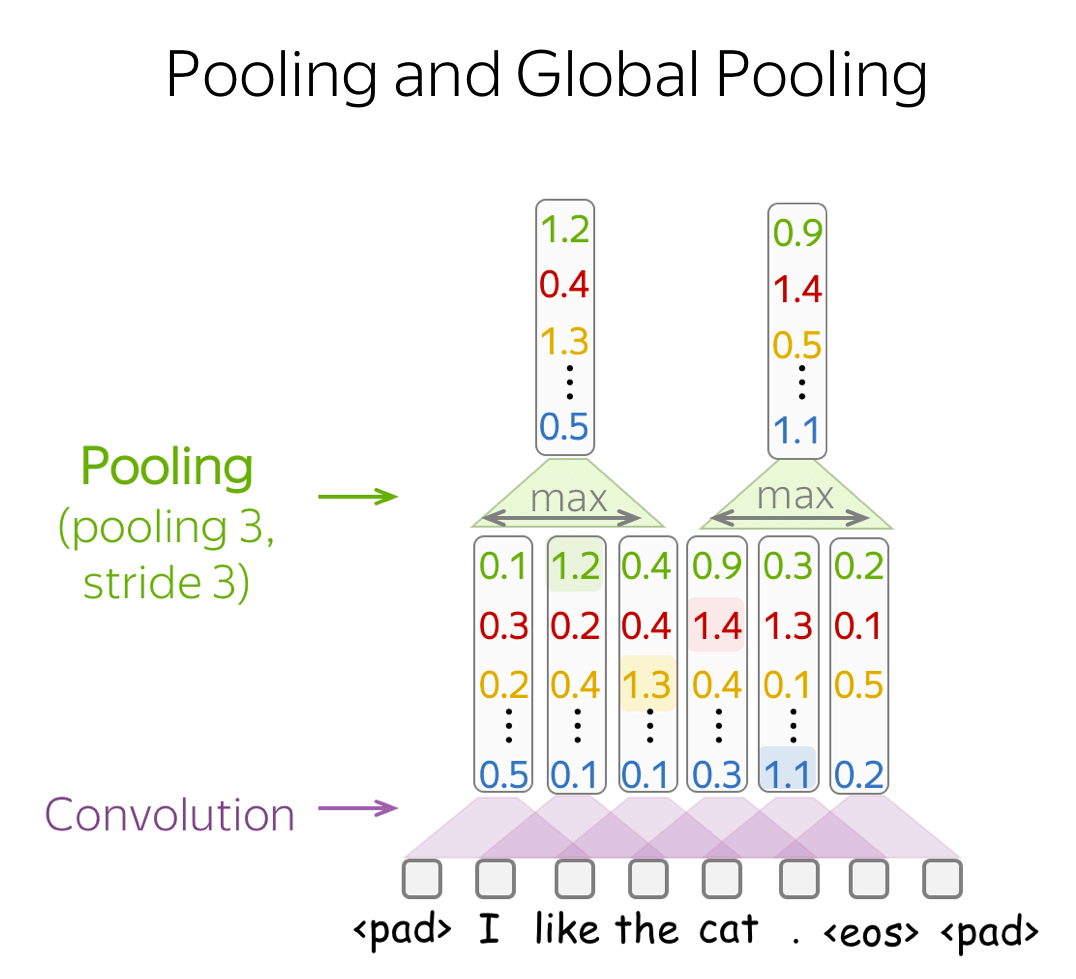

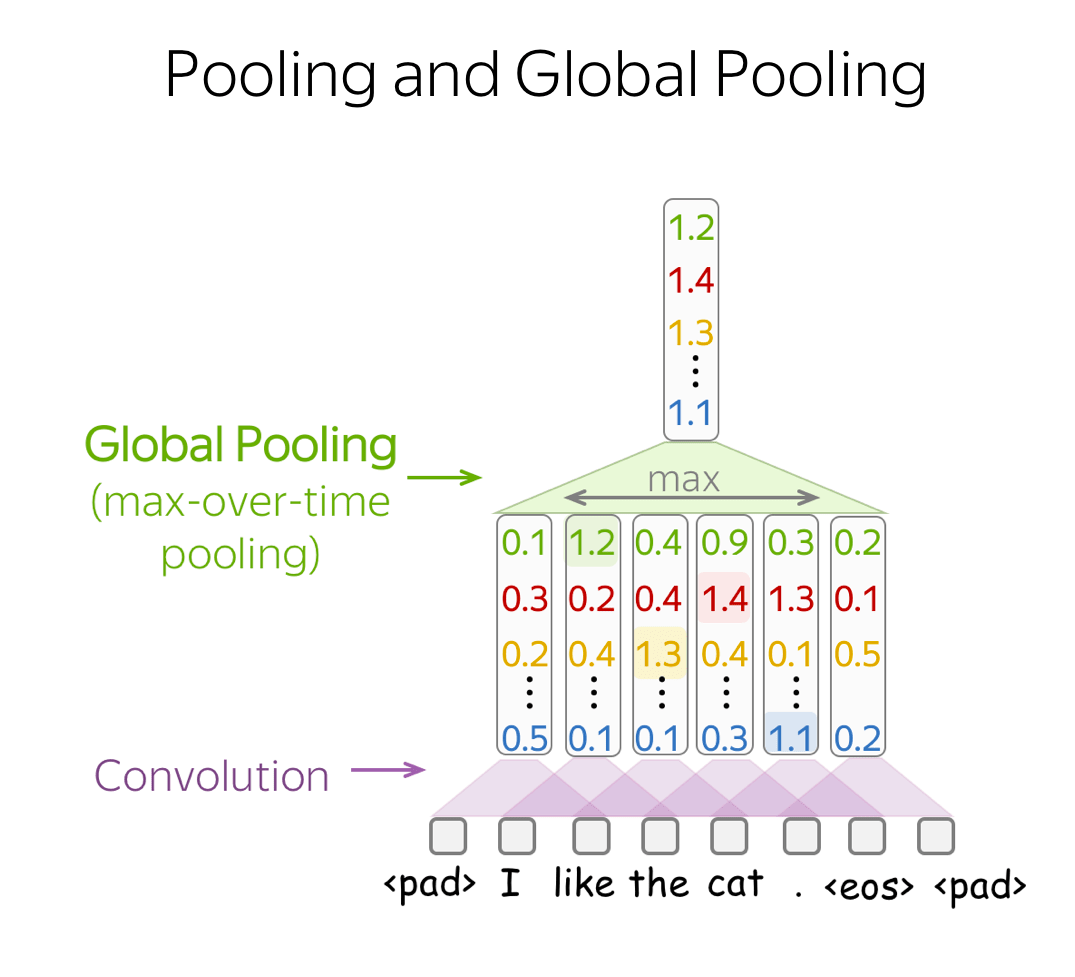

- Building Blocks: Pooling (max/mean, k-max, global)

- CNN Models: Text Classification

- CNN Models: Language Modeling
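The convolution parameters named in the list above (kernel, stride, padding, bias) and global max pooling can be illustrated with a small pure-Python sketch over a sequence of scalars. It is a simplified illustration under stated assumptions, not the lecture's code: a single filter, one input channel, zero padding on both sides, and a kernel no longer than the padded input. All function names are hypothetical.

```python
def conv1d_output_length(n, kernel, stride=1, padding=0):
    """Number of output positions for a 1D convolution over n inputs."""
    return (n + 2 * padding - kernel) // stride + 1


def conv1d(xs, weights, bias=0.0, stride=1, padding=0):
    """Slide one filter over the sequence; the kernel size is len(weights)."""
    padded = [0.0] * padding + list(xs) + [0.0] * padding
    k = len(weights)
    out = []
    for start in range(0, len(padded) - k + 1, stride):
        window = padded[start:start + k]
        out.append(sum(w * x for w, x in zip(weights, window)) + bias)
    return out


def global_max_pool(features):
    """Global max pooling: keep the largest activation over all positions."""
    return max(features)


xs = [1, 2, 3, 4, 5]
plain = conv1d(xs, [1, 0, -1])                         # kernel 3, stride 1, no padding
strided = conv1d(xs, [1, 0, -1], stride=2, padding=1)  # kernel 3, stride 2, padding 1
pooled = global_max_pool(strided)
```

With stride 2 and padding 1, the padded input has seven positions and the filter is applied at offsets 0, 2, and 4, which matches the three positions given by `conv1d_output_length(5, 3, stride=2, padding=1)`.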

Analysis and Interpretability
